import pandas as pd

import numpy as np

import sklearn

import pickle

import time

import datetime

import warnings

warnings.filterwarnings('ignore')imports

%run ../function_proposed_gcn.pywith open('../fraudTrain.pkl', 'rb') as file:

fraudTrain = pickle.load(file) df50 = throw(fraudTrain,0.5)

df_tr, df_tst = sklearn.model_selection.train_test_split(df50)

dfn = fraudTrain[::10]

dfn = dfn.reset_index(drop=True)

df_trn, df_tstn = sklearn.model_selection.train_test_split(dfn)df_tr.shape,df_tstn.shape((9009, 22), (26215, 22))df2, mask = concat(df_tr, df_tstn)

df2['index'] = df2.index

df = df2.reset_index()df.is_fraud.mean(), df_tr.is_fraud.mean(), df_tstn.is_fraud.mean()(0.1331762434703611, 0.5034965034965035, 0.005912645432004577)groups = df.groupby('cc_num')edge_index = np.array([item for sublist in (compute_time_difference(group) for _, group in groups) for item in sublist])

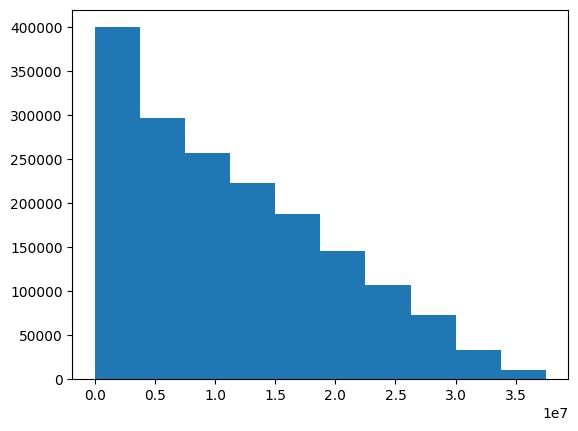

edge_index = edge_index.astype(np.float64)edge_index[:,2].mean()11406996.079461852plt.hist(edge_index[:,2])(array([400006., 296322., 257056., 223416., 187514., 145170., 106978.,

72762., 33480., 10354.]),

array([ 0., 3750444., 7500888., 11251332., 15001776., 18752220.,

22502664., 26253108., 30003552., 33753996., 37504440.]),

<BarContainer object of 10 artists>)

theta = edge_index[:,2].mean()edge_index[:,2] = (np.exp(-edge_index[:,2]/(theta)) != 1)*(np.exp(-edge_index[:,2]/(theta))).tolist()gamma = 0.8edge_index = torch.tensor([(int(row[0]), int(row[1])) for row in edge_index if row[2] > gamma], dtype=torch.long).t()x = torch.tensor(df['amt'].values, dtype=torch.float).reshape(-1,1)

y = torch.tensor(df['is_fraud'].values,dtype=torch.int64)

data = torch_geometric.data.Data(x=x, edge_index = edge_index, y=y, train_mask = mask[0], test_mask= mask[1])model = GCN1()

optimizer = torch.optim.Adam(model.parameters(), lr=0.01, weight_decay=5e-4)

yy = (data.y[data.test_mask]).numpy()

yyhat, yyhat_ = train_and_evaluate_model(data, model, optimizer)

yyhat_ = yyhat_.detach().numpy()

eval = evaluation(yy, yyhat, yyhat_)eval{'acc': 0.9121495327102803,

'pre': 0.059113300492610835,

'rec': 0.9290322580645162,

'f1': 0.11115399459668081,

'auc': 0.9698868615849281}result = {

'model': 'GCN',

'time': None,

'acc': eval['acc'],

'pre': eval['pre'],

'rec': eval['rec'],

'f1': eval['f1'],

'auc': eval['auc'],

'graph_based': True,

'method': 'Proposed',

'throw_rate': df.is_fraud.mean(),

'train_size': len(df_tr),

'train_cols': 'amt',

'train_frate': df_tr.is_fraud.mean(),

'test_size': len(df_tstn),

'test_frate': df_tstn.is_fraud.mean(),

'hyper_params': None,

'theta': theta,

'gamma': gamma

}

#df_results = df_results.append(result, ignore_index=True)df50 = throw(fraudTrain,0.5)df_tr, df_tst = sklearn.model_selection.train_test_split(df50)dfn = fraudTrain[::10]

dfn = dfn.reset_index(drop=True)

df_trn, df_tstn = sklearn.model_selection.train_test_split(dfn)df2, mask = concat(df_tr, df_tstn)

df2['index'] = df2.index

df = df2.reset_index()groups = df.groupby('cc_num')

edge_index = np.array([item for sublist in (compute_time_difference(group) for _, group in groups) for item in sublist])

edge_index = edge_index.astype(np.float64)

edge_index[:,2] = (np.exp(-edge_index[:,2]/(theta)) != 1)*(np.exp(-edge_index[:,2]/(theta))).tolist()

edge_index = torch.tensor([(int(row[0]), int(row[1])) for row in edge_index if row[2] > gamma], dtype=torch.long).t()x = torch.tensor(df['amt'].values, dtype=torch.float).reshape(-1,1)

y = torch.tensor(df['is_fraud'].values,dtype=torch.int64)

data = torch_geometric.data.Data(x=x, edge_index = edge_index, y=y, train_mask = mask[0], test_mask= mask[1])

model = GCN1()

optimizer = torch.optim.Adam(model.parameters(), lr=0.01, weight_decay=5e-4)

yy = (data.y[data.test_mask]).numpy()

yyhat, yyhat_ = train_and_evaluate_model(data, model, optimizer)

yyhat_ = yyhat_.detach().numpy()

eval = evaluation(yy, yyhat, yyhat_)eval{'acc': 0.9183673469387755,

'pre': 0.06701708278580815,

'rec': 0.9386503067484663,

'f1': 0.12510220768601796,

'auc': 0.9712453337779372}