import pandas as pd

import numpy as np

import sklearn

import pickle

import time

import datetime

import warnings

from autogluon.tabular import TabularDataset, TabularPredictor

warnings.filterwarnings('ignore')

df_train1 = pd.read_csv('~/Dropbox/Data/df_train1.csv')

df_train2 = pd.read_csv('~/Dropbox/Data/df_train2.csv')

df_train3 = pd.read_csv('~/Dropbox/Data/df_train3.csv')

df_train4 = pd.read_csv('~/Dropbox/Data/df_train4.csv')

df_train5 = pd.read_csv('~/Dropbox/Data/df_train5.csv')

df_train6 = pd.read_csv('~/Dropbox/Data/df_train6.csv')

df_train7 = pd.read_csv('~/Dropbox/Data/df_train7.csv')

df_train8 = pd.read_csv('~/Dropbox/Data/df_train8.csv')

df_test = pd.read_csv('~/Dropbox/Data/df_test.csv')

(df_train1.shape, df_train1.is_fraud.mean()), (df_test.shape, df_test.is_fraud.mean())

(((734003, 22), 0.005728859418830713), ((314572, 22), 0.005725239372862174))

_df1 = pd.concat([df_train1, df_test])

_df2 = pd.concat([df_train2, df_test])

_df3 = pd.concat([df_train3, df_test])

_df4 = pd.concat([df_train4, df_test])

_df5 = pd.concat([df_train5, df_test])

_df6 = pd.concat([df_train6, df_test])

_df7 = pd.concat([df_train7, df_test])

_df8 = pd.concat([df_train8, df_test])

_df1_mean = _df1.is_fraud.mean()

_df2_mean = _df2.is_fraud.mean()

_df3_mean = _df3.is_fraud.mean()

_df4_mean = _df4.is_fraud.mean()

_df5_mean = _df5.is_fraud.mean()

_df6_mean = _df6.is_fraud.mean()

_df7_mean = _df7.is_fraud.mean()

_df8_mean = _df8.is_fraud.mean()

def auto_amt_ver0503(df_tr, df_tst, _df_mean):
    # Keep only the transaction amount and the label
    df_tr = df_tr[["amt", "is_fraud"]]
    df_tst = df_tst[["amt", "is_fraud"]]

    tr = TabularDataset(df_tr)
    tst = TabularDataset(df_tst)

    predictr = TabularPredictor(label="is_fraud", verbosity=1)

    # Total wall-clock time for fitting all models together (not per model)
    t1 = time.time()
    predictr.fit(tr)
    t2 = time.time()
    time_diff = t2 - t1

    # _trainer is a private attribute; recent AutoGluon releases also
    # provide predictr.model_names() as a public way to list the models
    models = predictr._trainer.model_graph.nodes

    results = []
    for model_name in models:
        # Evaluate the model on the test set
        eval_result = predictr.evaluate(tst, model=model_name)

        # Collect the metrics (turned into a DataFrame below)
        results.append({'model': model_name,
                        'acc': eval_result['accuracy'],
                        'pre': eval_result['precision'],
                        'rec': eval_result['recall'],
                        'f1': eval_result['f1'],
                        'auc': eval_result['roc_auc']})

    model = []
    times = []  # renamed so it no longer overwrites time_diff above
    acc = []
    pre = []
    rec = []
    f1 = []
    auc = []
    graph_based = []
    method = []
    throw_rate = []
    train_size = []
    train_cols = []
    train_frate = []
    test_size = []
    test_frate = []
    hyper_params = []

    for result in results:
        model_name = result['model']
        model.append(model_name)
        # Wanted the training time of each model here, but could not get it
        # to work; time_diff only holds the total fit time of all models
        times.append(None)
        acc.append(result['acc'])
        pre.append(result['pre'])
        rec.append(result['rec'])
        f1.append(result['f1'])
        auc.append(result['auc'])
        graph_based.append(False)
        method.append('Autogluon')
        throw_rate.append(_df_mean)
        train_size.append(len(tr))
        train_cols.append([col for col in tr.columns if col != 'is_fraud'])
        train_frate.append(tr.is_fraud.mean())
        test_size.append(len(tst))
        test_frate.append(tst.is_fraud.mean())
        hyper_params.append(None)

    df_results = pd.DataFrame(dict(
        model=model,
        time=times,
        acc=acc,
        pre=pre,
        rec=rec,
        f1=f1,
        auc=auc,
        graph_based=graph_based,
        method=method,
        throw_rate=throw_rate,
        train_size=train_size,
        train_cols=train_cols,
        train_frate=train_frate,
        test_size=test_size,
        test_frate=test_frate,
        hyper_params=hyper_params
    ))

    # Save the results under a timestamped file name
    ymdhms = datetime.datetime.fromtimestamp(time.time()).strftime('%Y%m%d-%H%M%S')
    df_results.to_csv(f'../results2/{ymdhms}-Autogluon.csv', index=False)

    return df_results

auto_amt_ver0503(df_train1, df_test, _df1_mean)

No path specified. Models will be saved in: "AutogluonModels/ag-20240520_052634/"

AutoGluon infers your prediction problem is: 'binary' (because only two unique label-values observed).

If 'binary' is not the correct problem_type, please manually specify the problem_type parameter during predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression'])

| | model | time | acc | pre | rec | f1 | auc | graph_based | method | throw_rate | train_size | train_cols | train_frate | test_size | test_frate | hyper_params |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | KNeighborsUnif | None | 0.994218 | 0.489865 | 0.241532 | 0.323540 | 0.782174 | False | Autogluon | 0.005728 | 734003 | [amt] | 0.005729 | 314572 | 0.005725 | None |
| 1 | KNeighborsDist | None | 0.993582 | 0.403197 | 0.252082 | 0.310215 | 0.750937 | False | Autogluon | 0.005728 | 734003 | [amt] | 0.005729 | 314572 | 0.005725 | None |
| 2 | LightGBMXT | None | 0.994275 | 0.000000 | 0.000000 | 0.000000 | 0.765047 | False | Autogluon | 0.005728 | 734003 | [amt] | 0.005729 | 314572 | 0.005725 | None |
| 3 | LightGBM | None | 0.994275 | 0.000000 | 0.000000 | 0.000000 | 0.957828 | False | Autogluon | 0.005728 | 734003 | [amt] | 0.005729 | 314572 | 0.005725 | None |
| 4 | RandomForestGini | None | 0.993140 | 0.363844 | 0.264853 | 0.306555 | 0.816185 | False | Autogluon | 0.005728 | 734003 | [amt] | 0.005729 | 314572 | 0.005725 | None |
| 5 | RandomForestEntr | None | 0.993140 | 0.363844 | 0.264853 | 0.306555 | 0.816185 | False | Autogluon | 0.005728 | 734003 | [amt] | 0.005729 | 314572 | 0.005725 | None |
| 6 | CatBoost | None | 0.994275 | 0.000000 | 0.000000 | 0.000000 | 0.870449 | False | Autogluon | 0.005728 | 734003 | [amt] | 0.005729 | 314572 | 0.005725 | None |
| 7 | ExtraTreesGini | None | 0.993483 | 0.393316 | 0.254858 | 0.309299 | 0.840480 | False | Autogluon | 0.005728 | 734003 | [amt] | 0.005729 | 314572 | 0.005725 | None |
| 8 | ExtraTreesEntr | None | 0.993493 | 0.393966 | 0.253748 | 0.308680 | 0.839967 | False | Autogluon | 0.005728 | 734003 | [amt] | 0.005729 | 314572 | 0.005725 | None |
| 9 | NeuralNetFastAI | None | 0.994275 | 0.000000 | 0.000000 | 0.000000 | 0.943377 | False | Autogluon | 0.005728 | 734003 | [amt] | 0.005729 | 314572 | 0.005725 | None |
| 10 | XGBoost | None | 0.994275 | 0.000000 | 0.000000 | 0.000000 | 0.937504 | False | Autogluon | 0.005728 | 734003 | [amt] | 0.005729 | 314572 | 0.005725 | None |
| 11 | NeuralNetTorch | None | 0.994443 | 0.573003 | 0.115491 | 0.192237 | 0.957382 | False | Autogluon | 0.005728 | 734003 | [amt] | 0.005729 | 314572 | 0.005725 | None |
| 12 | LightGBMLarge | None | 0.994275 | 0.000000 | 0.000000 | 0.000000 | 0.960352 | False | Autogluon | 0.005728 | 734003 | [amt] | 0.005729 | 314572 | 0.005725 | None |
| 13 | WeightedEnsemble_L2 | None | 0.994408 | 0.535593 | 0.175458 | 0.264325 | 0.956493 | False | Autogluon | 0.005728 | 734003 | [amt] | 0.005729 | 314572 | 0.005725 | None |
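
Six models above (LightGBMXT, LightGBM, CatBoost, NeuralNetFastAI, XGBoost, LightGBMLarge) score acc 0.994275 with zero precision, recall and F1. That accuracy equals 1 - test_frate, which is exactly what a classifier that labels every test transaction as non-fraud would get. A minimal stdlib sketch of that constant baseline, assuming the 1,801 frauds among 314,572 test rows implied by test_frate; ratios with a zero denominator are reported as 0.0 here (an assumption about how the zeros in the table arise).

```python
def binary_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall and F1 from confusion-matrix counts.

    Any ratio whose denominator is zero is reported as 0.0.
    """
    total = tp + fp + fn + tn
    acc = (tp + tn) / total
    pre = tp / (tp + fp) if tp + fp else 0.0
    rec = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * pre * rec / (pre + rec) if pre + rec else 0.0
    return acc, pre, rec, f1


# Test set implied by the table: 314,572 rows, 1,801 of them fraud (~0.005725).
n_test, n_fraud = 314_572, 1_801

# A constant "never fraud" predictor: every fraud becomes a false negative.
acc, pre, rec, f1 = binary_metrics(tp=0, fp=0, fn=n_fraud, tn=n_test - n_fraud)
print(round(acc, 6), pre, rec, f1)
```

High accuracy is therefore uninformative at this base rate; the pre/rec/f1 columns are the ones that separate the models.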

auto_amt_ver0503(df_train2, df_test, _df2_mean)

No path specified. Models will be saved in: "AutogluonModels/ag-20240520_053531/"

AutoGluon infers your prediction problem is: 'binary' (because only two unique label-values observed).

If 'binary' is not the correct problem_type, please manually specify the problem_type parameter during predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression'])

| | model | time | acc | pre | rec | f1 | auc | graph_based | method | throw_rate | train_size | train_cols | train_frate | test_size | test_frate | hyper_params |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | KNeighborsUnif | None | 0.993753 | 0.440837 | 0.339256 | 0.383433 | 0.816442 | False | Autogluon | 0.008171 | 420500 | [amt] | 0.01 | 314572 | 0.005725 | None |
| 1 | KNeighborsDist | None | 0.992984 | 0.378443 | 0.350916 | 0.364160 | 0.793232 | False | Autogluon | 0.008171 | 420500 | [amt] | 0.01 | 314572 | 0.005725 | None |
| 2 | LightGBMXT | None | 0.994275 | 0.000000 | 0.000000 | 0.000000 | 0.765797 | False | Autogluon | 0.008171 | 420500 | [amt] | 0.01 | 314572 | 0.005725 | None |
| 3 | LightGBM | None | 0.994275 | 0.000000 | 0.000000 | 0.000000 | 0.957293 | False | Autogluon | 0.008171 | 420500 | [amt] | 0.01 | 314572 | 0.005725 | None |
| 4 | RandomForestGini | None | 0.992393 | 0.341880 | 0.355358 | 0.348489 | 0.840338 | False | Autogluon | 0.008171 | 420500 | [amt] | 0.01 | 314572 | 0.005725 | None |
| 5 | RandomForestEntr | None | 0.992393 | 0.341880 | 0.355358 | 0.348489 | 0.840338 | False | Autogluon | 0.008171 | 420500 | [amt] | 0.01 | 314572 | 0.005725 | None |
| 6 | CatBoost | None | 0.994253 | 0.496150 | 0.250416 | 0.332841 | 0.936583 | False | Autogluon | 0.008171 | 420500 | [amt] | 0.01 | 314572 | 0.005725 | None |
| 7 | ExtraTreesGini | None | 0.992866 | 0.371296 | 0.354803 | 0.362862 | 0.860650 | False | Autogluon | 0.008171 | 420500 | [amt] | 0.01 | 314572 | 0.005725 | None |
| 8 | ExtraTreesEntr | None | 0.992911 | 0.373898 | 0.353137 | 0.363221 | 0.858746 | False | Autogluon | 0.008171 | 420500 | [amt] | 0.01 | 314572 | 0.005725 | None |
| 9 | NeuralNetFastAI | None | 0.993804 | 0.435315 | 0.276513 | 0.338200 | 0.929382 | False | Autogluon | 0.008171 | 420500 | [amt] | 0.01 | 314572 | 0.005725 | None |
| 10 | XGBoost | None | 0.994202 | 0.487514 | 0.249306 | 0.329904 | 0.939309 | False | Autogluon | 0.008171 | 420500 | [amt] | 0.01 | 314572 | 0.005725 | None |
| 11 | NeuralNetTorch | None | 0.994510 | 0.566071 | 0.176013 | 0.268530 | 0.952137 | False | Autogluon | 0.008171 | 420500 | [amt] | 0.01 | 314572 | 0.005725 | None |
| 12 | LightGBMLarge | None | 0.994275 | 0.000000 | 0.000000 | 0.000000 | 0.960304 | False | Autogluon | 0.008171 | 420500 | [amt] | 0.01 | 314572 | 0.005725 | None |
| 13 | WeightedEnsemble_L2 | None | 0.994510 | 0.566071 | 0.176013 | 0.268530 | 0.952137 | False | Autogluon | 0.008171 | 420500 | [amt] | 0.01 | 314572 | 0.005725 | None |
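
The throw_rate column is `_df_mean`, the fraud rate of the training set and test set concatenated with `pd.concat`, so it is a size-weighted average of train_frate and test_frate rather than a property of either split alone. A quick stdlib check against the df_train2 run above (420,500 training rows at 0.01, 314,572 test rows at about 0.005725):

```python
def pooled_rate(n_train, rate_train, n_test, rate_test):
    """Fraud rate of train and test stacked together (size-weighted mean)."""
    frauds = n_train * rate_train + n_test * rate_test
    return frauds / (n_train + n_test)


rate = pooled_rate(420_500, 0.01, 314_572, 0.005725239372862174)
print(round(rate, 6))  # 0.008171, the throw_rate shown for df_train2
```

The same formula with 734,003 rows at 0.005728859... reproduces the 0.005728 shown for df_train1.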

auto_amt_ver0503(df_train3, df_test, _df3_mean)

No path specified. Models will be saved in: "AutogluonModels/ag-20240520_053949/"

AutoGluon infers your prediction problem is: 'binary' (because only two unique label-values observed).

If 'binary' is not the correct problem_type, please manually specify the problem_type parameter during predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression'])

| | model | time | acc | pre | rec | f1 | auc | graph_based | method | throw_rate | train_size | train_cols | train_frate | test_size | test_frate | hyper_params |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | KNeighborsUnif | None | 0.988111 | 0.269002 | 0.626874 | 0.376459 | 0.881531 | False | Autogluon | 0.015065 | 84100 | [amt] | 0.05 | 314572 | 0.005725 | None |
| 1 | KNeighborsDist | None | 0.986839 | 0.239242 | 0.595780 | 0.341394 | 0.866643 | False | Autogluon | 0.015065 | 84100 | [amt] | 0.05 | 314572 | 0.005725 | None |
| 2 | LightGBMXT | None | 0.994275 | 0.000000 | 0.000000 | 0.000000 | 0.781026 | False | Autogluon | 0.015065 | 84100 | [amt] | 0.05 | 314572 | 0.005725 | None |
| 3 | LightGBM | None | 0.990857 | 0.327004 | 0.564131 | 0.414018 | 0.962248 | False | Autogluon | 0.015065 | 84100 | [amt] | 0.05 | 314572 | 0.005725 | None |
| 4 | RandomForestGini | None | 0.985933 | 0.221444 | 0.579123 | 0.320381 | 0.892199 | False | Autogluon | 0.015065 | 84100 | [amt] | 0.05 | 314572 | 0.005725 | None |
| 5 | RandomForestEntr | None | 0.985933 | 0.221444 | 0.579123 | 0.320381 | 0.892199 | False | Autogluon | 0.015065 | 84100 | [amt] | 0.05 | 314572 | 0.005725 | None |
| 6 | CatBoost | None | 0.989109 | 0.289889 | 0.622432 | 0.395554 | 0.947419 | False | Autogluon | 0.015065 | 84100 | [amt] | 0.05 | 314572 | 0.005725 | None |
| 7 | ExtraTreesGini | None | 0.986935 | 0.240853 | 0.595780 | 0.343031 | 0.901395 | False | Autogluon | 0.015065 | 84100 | [amt] | 0.05 | 314572 | 0.005725 | None |
| 8 | ExtraTreesEntr | None | 0.986881 | 0.239937 | 0.595780 | 0.342101 | 0.900649 | False | Autogluon | 0.015065 | 84100 | [amt] | 0.05 | 314572 | 0.005725 | None |
| 9 | NeuralNetFastAI | None | 0.992202 | 0.358630 | 0.459189 | 0.402727 | 0.892828 | False | Autogluon | 0.015065 | 84100 | [amt] | 0.05 | 314572 | 0.005725 | None |
| 10 | XGBoost | None | 0.989122 | 0.290080 | 0.621877 | 0.395620 | 0.947978 | False | Autogluon | 0.015065 | 84100 | [amt] | 0.05 | 314572 | 0.005725 | None |
| 11 | NeuralNetTorch | None | 0.989328 | 0.300205 | 0.649084 | 0.410536 | 0.956575 | False | Autogluon | 0.015065 | 84100 | [amt] | 0.05 | 314572 | 0.005725 | None |
| 12 | LightGBMLarge | None | 0.990857 | 0.327004 | 0.564131 | 0.414018 | 0.960620 | False | Autogluon | 0.015065 | 84100 | [amt] | 0.05 | 314572 | 0.005725 | None |
| 13 | WeightedEnsemble_L2 | None | 0.989675 | 0.307937 | 0.644087 | 0.416667 | 0.953609 | False | Autogluon | 0.015065 | 84100 | [amt] | 0.05 | 314572 | 0.005725 | None |
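
The f1 column is the harmonic mean of pre and rec, so it stays low whenever either one is small; as train_frate rises in the later runs, recall climbs while precision drops and F1 falls with it. Recomputing the LightGBM row of the df_train3 table from its rounded precision and recall (a stdlib sketch, not AutoGluon's own implementation):

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# LightGBM row of the df_train3 table: pre=0.327004, rec=0.564131, f1=0.414018
print(round(f1_score(0.327004, 0.564131), 6))
```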

auto_amt_ver0503(df_train4, df_test, _df4_mean)

No path specified. Models will be saved in: "AutogluonModels/ag-20240520_054133/"

AutoGluon infers your prediction problem is: 'binary' (because only two unique label-values observed).

If 'binary' is not the correct problem_type, please manually specify the problem_type parameter during predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression'])

| | model | time | acc | pre | rec | f1 | auc | graph_based | method | throw_rate | train_size | train_cols | train_frate | test_size | test_frate | hyper_params |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | KNeighborsUnif | None | 0.982595 | 0.201106 | 0.686285 | 0.311061 | 0.900398 | False | Autogluon | 0.016841 | 42050 | [amt] | 0.1 | 314572 | 0.005725 | None |
| 1 | KNeighborsDist | None | 0.978968 | 0.164834 | 0.657413 | 0.263580 | 0.881212 | False | Autogluon | 0.016841 | 42050 | [amt] | 0.1 | 314572 | 0.005725 | None |
| 2 | LightGBMXT | None | 0.978984 | 0.161268 | 0.635758 | 0.257274 | 0.946402 | False | Autogluon | 0.016841 | 42050 | [amt] | 0.1 | 314572 | 0.005725 | None |
| 3 | LightGBM | None | 0.988766 | 0.286629 | 0.646308 | 0.397134 | 0.961948 | False | Autogluon | 0.016841 | 42050 | [amt] | 0.1 | 314572 | 0.005725 | None |
| 4 | RandomForestGini | None | 0.976641 | 0.146733 | 0.639645 | 0.238707 | 0.912443 | False | Autogluon | 0.016841 | 42050 | [amt] | 0.1 | 314572 | 0.005725 | None |
| 5 | RandomForestEntr | None | 0.976641 | 0.146733 | 0.639645 | 0.238707 | 0.912443 | False | Autogluon | 0.016841 | 42050 | [amt] | 0.1 | 314572 | 0.005725 | None |
| 6 | CatBoost | None | 0.984334 | 0.221946 | 0.692948 | 0.336207 | 0.954685 | False | Autogluon | 0.016841 | 42050 | [amt] | 0.1 | 314572 | 0.005725 | None |
| 7 | ExtraTreesGini | None | 0.978558 | 0.161833 | 0.656857 | 0.259686 | 0.922153 | False | Autogluon | 0.016841 | 42050 | [amt] | 0.1 | 314572 | 0.005725 | None |
| 8 | ExtraTreesEntr | None | 0.978565 | 0.162062 | 0.657968 | 0.260068 | 0.921562 | False | Autogluon | 0.016841 | 42050 | [amt] | 0.1 | 314572 | 0.005725 | None |
| 9 | NeuralNetFastAI | None | 0.978072 | 0.169241 | 0.724042 | 0.274353 | 0.933527 | False | Autogluon | 0.016841 | 42050 | [amt] | 0.1 | 314572 | 0.005725 | None |
| 10 | XGBoost | None | 0.986776 | 0.254322 | 0.677957 | 0.369888 | 0.948753 | False | Autogluon | 0.016841 | 42050 | [amt] | 0.1 | 314572 | 0.005725 | None |
| 11 | NeuralNetTorch | None | 0.984684 | 0.227363 | 0.698501 | 0.343060 | 0.958205 | False | Autogluon | 0.016841 | 42050 | [amt] | 0.1 | 314572 | 0.005725 | None |
| 12 | LightGBMLarge | None | 0.988766 | 0.286629 | 0.646308 | 0.397134 | 0.960951 | False | Autogluon | 0.016841 | 42050 | [amt] | 0.1 | 314572 | 0.005725 | None |
| 13 | WeightedEnsemble_L2 | None | 0.986868 | 0.257897 | 0.689062 | 0.375321 | 0.961334 | False | Autogluon | 0.016841 | 42050 | [amt] | 0.1 | 314572 | 0.005725 | None |
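
The time column is None in every table because `auto_amt_ver0503` appends None for each model and only measures the total `predictr.fit` time. AutoGluon's `predictr.leaderboard()` returns a DataFrame with a per-model `fit_time` column, so one possible approach (not what the notebook does) is to build a `{model: fit_time}` mapping from it and look each model up while filling the results. The sketch below shows only that lookup step in plain Python; the mapping is hypothetical and its number is a placeholder, not a measured time.

```python
def attach_fit_times(results, fit_times):
    """Return copies of the result dicts with a 'time' key filled in.

    results   -- list of dicts with at least a 'model' key
    fit_times -- mapping model name -> seconds; missing models get None
    """
    return [{**r, "time": fit_times.get(r["model"])} for r in results]


# Hypothetical inputs. In the notebook, fit_times could be built from
#   lb = predictr.leaderboard()
#   dict(zip(lb["model"], lb["fit_time"]))
results = [{"model": "LightGBM", "f1": 0.41}, {"model": "CatBoost", "f1": 0.40}]
fit_times = {"LightGBM": 1.5}  # placeholder seconds, not real measurements

for row in attach_fit_times(results, fit_times):
    print(row)
```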

auto_amt_ver0503(df_train5, df_test, _df5_mean)

No path specified. Models will be saved in: "AutogluonModels/ag-20240520_054246/"

AutoGluon infers your prediction problem is: 'binary' (because only two unique label-values observed).

If 'binary' is not the correct problem_type, please manually specify the problem_type parameter during predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression'])

| | model | time | acc | pre | rec | f1 | auc | graph_based | method | throw_rate | train_size | train_cols | train_frate | test_size | test_frate | hyper_params |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | KNeighborsUnif | None | 0.963411 | 0.108540 | 0.747363 | 0.189551 | 0.923442 | False | Autogluon | 0.017896 | 21025 | [amt] | 0.2 | 314572 | 0.005725 | None |
| 1 | KNeighborsDist | None | 0.956846 | 0.090896 | 0.726263 | 0.161571 | 0.897693 | False | Autogluon | 0.017896 | 21025 | [amt] | 0.2 | 314572 | 0.005725 | None |
| 2 | LightGBMXT | None | 0.975347 | 0.154720 | 0.740700 | 0.255972 | 0.951622 | False | Autogluon | 0.017896 | 21025 | [amt] | 0.2 | 314572 | 0.005725 | None |
| 3 | LightGBM | None | 0.982532 | 0.205704 | 0.716824 | 0.319673 | 0.961749 | False | Autogluon | 0.017896 | 21025 | [amt] | 0.2 | 314572 | 0.005725 | None |
| 4 | RandomForestGini | None | 0.949843 | 0.078371 | 0.721266 | 0.141380 | 0.927522 | False | Autogluon | 0.017896 | 21025 | [amt] | 0.2 | 314572 | 0.005725 | None |
| 5 | RandomForestEntr | None | 0.949843 | 0.078371 | 0.721266 | 0.141380 | 0.927522 | False | Autogluon | 0.017896 | 21025 | [amt] | 0.2 | 314572 | 0.005725 | None |
| 6 | CatBoost | None | 0.981248 | 0.194635 | 0.725153 | 0.306897 | 0.962530 | False | Autogluon | 0.017896 | 21025 | [amt] | 0.2 | 314572 | 0.005725 | None |
| 7 | ExtraTreesGini | None | 0.953333 | 0.085378 | 0.736258 | 0.153012 | 0.933086 | False | Autogluon | 0.017896 | 21025 | [amt] | 0.2 | 314572 | 0.005725 | None |
| 8 | ExtraTreesEntr | None | 0.952920 | 0.084616 | 0.735702 | 0.151775 | 0.935070 | False | Autogluon | 0.017896 | 21025 | [amt] | 0.2 | 314572 | 0.005725 | None |
| 9 | NeuralNetFastAI | None | 0.973888 | 0.148217 | 0.750139 | 0.247527 | 0.948571 | False | Autogluon | 0.017896 | 21025 | [amt] | 0.2 | 314572 | 0.005725 | None |
| 10 | XGBoost | None | 0.981159 | 0.193917 | 0.725708 | 0.306053 | 0.954487 | False | Autogluon | 0.017896 | 21025 | [amt] | 0.2 | 314572 | 0.005725 | None |
| 11 | NeuralNetTorch | None | 0.975204 | 0.154952 | 0.747918 | 0.256718 | 0.954467 | False | Autogluon | 0.017896 | 21025 | [amt] | 0.2 | 314572 | 0.005725 | None |
| 12 | LightGBMLarge | None | 0.982532 | 0.205704 | 0.716824 | 0.319673 | 0.959817 | False | Autogluon | 0.017896 | 21025 | [amt] | 0.2 | 314572 | 0.005725 | None |
| 13 | WeightedEnsemble_L2 | None | 0.982821 | 0.209389 | 0.720711 | 0.324500 | 0.961966 | False | Autogluon | 0.017896 | 21025 | [amt] | 0.2 | 314572 | 0.005725 | None |

auto_amt_ver0503(df_train6, df_test, _df6_mean)

No path specified. Models will be saved in: "AutogluonModels/ag-20240520_054324/"

AutoGluon infers your prediction problem is: 'binary' (because only two unique label-values observed).

If 'binary' is not the correct problem_type, please manually specify the problem_type parameter during predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression'])

| | model | time | acc | pre | rec | f1 | auc | graph_based | method | throw_rate | train_size | train_cols | train_frate | test_size | test_frate | hyper_params |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | KNeighborsUnif | None | 0.940697 | 0.072537 | 0.794003 | 0.132931 | 0.933847 | False | Autogluon | 0.018278 | 14017 | [amt] | 0.299993 | 314572 | 0.005725 | None |
| 1 | KNeighborsDist | None | 0.932499 | 0.063441 | 0.784009 | 0.117383 | 0.904577 | False | Autogluon | 0.018278 | 14017 | [amt] | 0.299993 | 314572 | 0.005725 | None |
| 2 | LightGBMXT | None | 0.971243 | 0.136405 | 0.754581 | 0.231044 | 0.947721 | False | Autogluon | 0.018278 | 14017 | [amt] | 0.299993 | 314572 | 0.005725 | None |
| 3 | LightGBM | None | 0.964120 | 0.113510 | 0.773459 | 0.197968 | 0.961942 | False | Autogluon | 0.018278 | 14017 | [amt] | 0.299993 | 314572 | 0.005725 | None |
| 4 | RandomForestGini | None | 0.920498 | 0.053932 | 0.779012 | 0.100881 | 0.934945 | False | Autogluon | 0.018278 | 14017 | [amt] | 0.299993 | 314572 | 0.005725 | None |
| 5 | RandomForestEntr | None | 0.920498 | 0.053932 | 0.779012 | 0.100881 | 0.934945 | False | Autogluon | 0.018278 | 14017 | [amt] | 0.299993 | 314572 | 0.005725 | None |
| 6 | CatBoost | None | 0.969501 | 0.129434 | 0.755691 | 0.221013 | 0.959693 | False | Autogluon | 0.018278 | 14017 | [amt] | 0.299993 | 314572 | 0.005725 | None |
| 7 | ExtraTreesGini | None | 0.925063 | 0.057803 | 0.790117 | 0.107726 | 0.940287 | False | Autogluon | 0.018278 | 14017 | [amt] | 0.299993 | 314572 | 0.005725 | None |
| 8 | ExtraTreesEntr | None | 0.925763 | 0.058505 | 0.792893 | 0.108970 | 0.940250 | False | Autogluon | 0.018278 | 14017 | [amt] | 0.299993 | 314572 | 0.005725 | None |
| 9 | NeuralNetFastAI | None | 0.972080 | 0.139732 | 0.751805 | 0.235663 | 0.898952 | False | Autogluon | 0.018278 | 14017 | [amt] | 0.299993 | 314572 | 0.005725 | None |
| 10 | XGBoost | None | 0.961230 | 0.106459 | 0.780677 | 0.187367 | 0.962270 | False | Autogluon | 0.018278 | 14017 | [amt] | 0.299993 | 314572 | 0.005725 | None |
| 11 | NeuralNetTorch | None | 0.976031 | 0.159245 | 0.744586 | 0.262375 | 0.953301 | False | Autogluon | 0.018278 | 14017 | [amt] | 0.299993 | 314572 | 0.005725 | None |
| 12 | LightGBMLarge | None | 0.973211 | 0.144070 | 0.744586 | 0.241426 | 0.959773 | False | Autogluon | 0.018278 | 14017 | [amt] | 0.299993 | 314572 | 0.005725 | None |
| 13 | WeightedEnsemble_L2 | None | 0.973211 | 0.144070 | 0.744586 | 0.241426 | 0.959773 | False | Autogluon | 0.018278 | 14017 | [amt] | 0.299993 | 314572 | 0.005725 | None |

auto_amt_ver0503(df_train7, df_test, _df7_mean)

No path specified. Models will be saved in: "AutogluonModels/ag-20240520_054355/"

AutoGluon infers your prediction problem is: 'binary' (because only two unique label-values observed).

If 'binary' is not the correct problem_type, please manually specify the problem_type parameter during predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression'])

| | model | time | acc | pre | rec | f1 | auc | graph_based | method | throw_rate | train_size | train_cols | train_frate | test_size | test_frate | hyper_params |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | KNeighborsUnif | None | 0.914633 | 0.053725 | 0.837313 | 0.100971 | 0.939006 | False | Autogluon | 0.018475 | 10512 | [amt] | 0.400019 | 314572 | 0.005725 | None |
| 1 | KNeighborsDist | None | 0.905713 | 0.048228 | 0.825652 | 0.091132 | 0.911215 | False | Autogluon | 0.018475 | 10512 | [amt] | 0.400019 | 314572 | 0.005725 | None |
| 2 | LightGBMXT | None | 0.945599 | 0.080585 | 0.816768 | 0.146697 | 0.955586 | False | Autogluon | 0.018475 | 10512 | [amt] | 0.400019 | 314572 | 0.005725 | None |
| 3 | LightGBM | None | 0.934616 | 0.069347 | 0.838978 | 0.128105 | 0.961903 | False | Autogluon | 0.018475 | 10512 | [amt] | 0.400019 | 314572 | 0.005725 | None |
| 4 | RandomForestGini | None | 0.887406 | 0.040562 | 0.823987 | 0.077318 | 0.938095 | False | Autogluon | 0.018475 | 10512 | [amt] | 0.400019 | 314572 | 0.005725 | None |
| 5 | RandomForestEntr | None | 0.887406 | 0.040562 | 0.823987 | 0.077318 | 0.938095 | False | Autogluon | 0.018475 | 10512 | [amt] | 0.400019 | 314572 | 0.005725 | None |
| 6 | CatBoost | None | 0.946769 | 0.082009 | 0.813992 | 0.149006 | 0.958300 | False | Autogluon | 0.018475 | 10512 | [amt] | 0.400019 | 314572 | 0.005725 | None |
| 7 | ExtraTreesGini | None | 0.897276 | 0.045003 | 0.837868 | 0.085418 | 0.944199 | False | Autogluon | 0.018475 | 10512 | [amt] | 0.400019 | 314572 | 0.005725 | None |
| 8 | ExtraTreesEntr | None | 0.896577 | 0.044737 | 0.838423 | 0.084941 | 0.943396 | False | Autogluon | 0.018475 | 10512 | [amt] | 0.400019 | 314572 | 0.005725 | None |
| 9 | NeuralNetFastAI | None | 0.970783 | 0.134520 | 0.755136 | 0.228360 | 0.901838 | False | Autogluon | 0.018475 | 10512 | [amt] | 0.400019 | 314572 | 0.005725 | None |
| 10 | XGBoost | None | 0.932667 | 0.067681 | 0.842310 | 0.125294 | 0.961863 | False | Autogluon | 0.018475 | 10512 | [amt] | 0.400019 | 314572 | 0.005725 | None |
| 11 | NeuralNetTorch | None | 0.938733 | 0.072435 | 0.821766 | 0.133135 | 0.955618 | False | Autogluon | 0.018475 | 10512 | [amt] | 0.400019 | 314572 | 0.005725 | None |
| 12 | LightGBMLarge | None | 0.932311 | 0.067767 | 0.848418 | 0.125508 | 0.960788 | False | Autogluon | 0.018475 | 10512 | [amt] | 0.400019 | 314572 | 0.005725 | None |
| 13 | WeightedEnsemble_L2 | None | 0.934616 | 0.069347 | 0.838978 | 0.128105 | 0.961903 | False | Autogluon | 0.018475 | 10512 | [amt] | 0.400019 | 314572 | 0.005725 | None |

auto_amt_ver0503(df_train8, df_test, _df8_mean)

No path specified. Models will be saved in: "AutogluonModels/ag-20240520_054431/"

AutoGluon infers your prediction problem is: 'binary' (because only two unique label-values observed).

If 'binary' is not the correct problem_type, please manually specify the problem_type parameter during predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression'])

| | model | time | acc | pre | rec | f1 | auc | graph_based | method | throw_rate | train_size | train_cols | train_frate | test_size | test_frate | hyper_params |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | KNeighborsUnif | None | 0.879996 | 0.040024 | 0.868406 | 0.076520 | 0.938083 | False | Autogluon | 0.018595 | 8410 | [amt] | 0.5 | 314572 | 0.005725 | None |
| 1 | KNeighborsDist | None | 0.876337 | 0.038557 | 0.860633 | 0.073808 | 0.907000 | False | Autogluon | 0.018595 | 8410 | [amt] | 0.5 | 314572 | 0.005725 | None |
| 2 | LightGBMXT | None | 0.883591 | 0.041820 | 0.882288 | 0.079855 | 0.952322 | False | Autogluon | 0.018595 | 8410 | [amt] | 0.5 | 314572 | 0.005725 | None |
| 3 | LightGBM | None | 0.908285 | 0.052093 | 0.873404 | 0.098322 | 0.960795 | False | Autogluon | 0.018595 | 8410 | [amt] | 0.5 | 314572 | 0.005725 | None |
| 4 | RandomForestGini | None | 0.860633 | 0.034338 | 0.860633 | 0.066040 | 0.939192 | False | Autogluon | 0.018595 | 8410 | [amt] | 0.5 | 314572 | 0.005725 | None |
| 5 | RandomForestEntr | None | 0.860633 | 0.034338 | 0.860633 | 0.066040 | 0.939192 | False | Autogluon | 0.018595 | 8410 | [amt] | 0.5 | 314572 | 0.005725 | None |
| 6 | CatBoost | None | 0.900090 | 0.048243 | 0.878401 | 0.091464 | 0.961486 | False | Autogluon | 0.018595 | 8410 | [amt] | 0.5 | 314572 | 0.005725 | None |
| 7 | ExtraTreesGini | None | 0.866968 | 0.036225 | 0.868406 | 0.069548 | 0.942842 | False | Autogluon | 0.018595 | 8410 | [amt] | 0.5 | 314572 | 0.005725 | None |
| 8 | ExtraTreesEntr | None | 0.868199 | 0.036466 | 0.866185 | 0.069985 | 0.942820 | False | Autogluon | 0.018595 | 8410 | [amt] | 0.5 | 314572 | 0.005725 | None |
| 9 | NeuralNetFastAI | None | 0.966326 | 0.118535 | 0.758468 | 0.205028 | 0.898449 | False | Autogluon | 0.018595 | 8410 | [amt] | 0.5 | 314572 | 0.005725 | None |
| 10 | XGBoost | None | 0.903482 | 0.049752 | 0.876180 | 0.094158 | 0.961121 | False | Autogluon | 0.018595 | 8410 | [amt] | 0.5 | 314572 | 0.005725 | None |
| 11 | NeuralNetTorch | None | 0.842033 | 0.032196 | 0.915047 | 0.062203 | 0.950496 | False | Autogluon | 0.018595 | 8410 | [amt] | 0.5 | 314572 | 0.005725 | None |
| 12 | LightGBMLarge | None | 0.892727 | 0.045191 | 0.881177 | 0.085972 | 0.960270 | False | Autogluon | 0.018595 | 8410 | [amt] | 0.5 | 314572 | 0.005725 | None |
| 13 | WeightedEnsemble_L2 | None | 0.849138 | 0.033570 | 0.912271 | 0.064758 | 0.950978 | False | Autogluon | 0.018595 | 8410 | [amt] | 0.5 | 314572 | 0.005725 | None |
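
Across the eight runs, train_size × train_frate is about 4,205 every time (420,500 × 0.01, 84,100 × 0.05, 8,410 × 0.5, and so on). That is consistent with each training file keeping the same fraud rows and dropping non-fraud rows until a target fraud rate is reached; the notebook does not show how the files were built, so treat this as an inference from the tables. Under that assumption the expected size for a target rate r is sketched below (the 0.4 file is left out because it sits on a half-row, 6,307.5 non-fraud rows, so its size depends on how that half was rounded):

```python
def undersampled_size(n_pos, target_rate):
    """Rows in a training set that keeps all n_pos frauds plus just enough
    non-fraud rows (rounded) for the fraud share to equal target_rate."""
    n_neg = round(n_pos * (1 - target_rate) / target_rate)
    return n_pos + n_neg


for r in (0.01, 0.05, 0.1, 0.2, 0.3, 0.5):
    print(r, undersampled_size(4205, r))
```

Because the fraud rows stay fixed, moving from df_train1 to df_train8 shrinks the training data from 734,003 to 8,410 rows, which is a trade-off worth keeping in mind when reading the rising recall and falling precision above.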

Check how well the models catch fraud among small transactions (amt <= 80).

df_80 = df_test[df_test['amt'] <= 80]

df_80.shape, df_80.is_fraud.mean()

((231011, 22), 0.0016665873053664112)

_df1_ = pd.concat([df_train1, df_80])

_df2_ = pd.concat([df_train2, df_80])

_df3_ = pd.concat([df_train3, df_80])

_df4_ = pd.concat([df_train4, df_80])

_df5_ = pd.concat([df_train5, df_80])

_df6_ = pd.concat([df_train6, df_80])

_df7_ = pd.concat([df_train7, df_80])

_df8_ = pd.concat([df_train8, df_80])

_df1_mean_ = _df1_.is_fraud.mean()

_df2_mean_ = _df2_.is_fraud.mean()

_df3_mean_ = _df3_.is_fraud.mean()

_df4_mean_ = _df4_.is_fraud.mean()

_df5_mean_ = _df5_.is_fraud.mean()

_df6_mean_ = _df6_.is_fraud.mean()

_df7_mean_ = _df7_.is_fraud.mean()

_df8_mean_ = _df8_.is_fraud.mean()

auto_amt_ver0503(df_train1, df_80, _df1_mean_)

No path specified. Models will be saved in: "AutogluonModels/ag-20240520_094408/"

AutoGluon infers your prediction problem is: 'binary' (because only two unique label-values observed).

If 'binary' is not the correct problem_type, please manually specify the problem_type parameter during predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression'])

auto_amt_ver0503(df_train2, df_80, _df2_mean_)

No path specified. Models will be saved in: "AutogluonModels/ag-20240520_064424/"

AutoGluon infers your prediction problem is: 'binary' (because only two unique label-values observed).

If 'binary' is not the correct problem_type, please manually specify the problem_type parameter during predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression'])

| | model | time | acc | pre | rec | f1 | auc | graph_based | method | throw_rate | train_size | train_cols | train_frate | test_size | test_frate | hyper_params |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | KNeighborsUnif | None | 0.998333 | 0.0 | 0.0 | 0.0 | 0.537194 | False | Autogluon | 0.007045 | 420500 | [amt] | 0.01 | 231011 | 0.001667 | None |
| 1 | KNeighborsDist | None | 0.998333 | 0.0 | 0.0 | 0.0 | 0.537194 | False | Autogluon | 0.007045 | 420500 | [amt] | 0.01 | 231011 | 0.001667 | None |
| 2 | LightGBMXT | None | 0.998333 | 0.0 | 0.0 | 0.0 | 0.619651 | False | Autogluon | 0.007045 | 420500 | [amt] | 0.01 | 231011 | 0.001667 | None |
| 3 | LightGBM | None | 0.998333 | 0.0 | 0.0 | 0.0 | 0.885680 | False | Autogluon | 0.007045 | 420500 | [amt] | 0.01 | 231011 | 0.001667 | None |
| 4 | RandomForestGini | None | 0.998333 | 0.0 | 0.0 | 0.0 | 0.631076 | False | Autogluon | 0.007045 | 420500 | [amt] | 0.01 | 231011 | 0.001667 | None |
| 5 | RandomForestEntr | None | 0.998333 | 0.0 | 0.0 | 0.0 | 0.631076 | False | Autogluon | 0.007045 | 420500 | [amt] | 0.01 | 231011 | 0.001667 | None |
| 6 | CatBoost | None | 0.998333 | 0.0 | 0.0 | 0.0 | 0.854812 | False | Autogluon | 0.007045 | 420500 | [amt] | 0.01 | 231011 | 0.001667 | None |
| 7 | ExtraTreesGini | None | 0.998333 | 0.0 | 0.0 | 0.0 | 0.631076 | False | Autogluon | 0.007045 | 420500 | [amt] | 0.01 | 231011 | 0.001667 | None |
| 8 | ExtraTreesEntr | None | 0.998333 | 0.0 | 0.0 | 0.0 | 0.631076 | False | Autogluon | 0.007045 | 420500 | [amt] | 0.01 | 231011 | 0.001667 | None |
| 9 | NeuralNetFastAI | None | 0.998333 | 0.0 | 0.0 | 0.0 | 0.746475 | False | Autogluon | 0.007045 | 420500 | [amt] | 0.01 | 231011 | 0.001667 | None |
| 10 | XGBoost | None | 0.998333 | 0.0 | 0.0 | 0.0 | 0.826658 | False | Autogluon | 0.007045 | 420500 | [amt] | 0.01 | 231011 | 0.001667 | None |
| 11 | NeuralNetTorch | None | 0.998333 | 0.0 | 0.0 | 0.0 | 0.858653 | False | Autogluon | 0.007045 | 420500 | [amt] | 0.01 | 231011 | 0.001667 | None |
| 12 | LightGBMLarge | None | 0.998333 | 0.0 | 0.0 | 0.0 | 0.885310 | False | Autogluon | 0.007045 | 420500 | [amt] | 0.01 | 231011 | 0.001667 | None |
| 13 | WeightedEnsemble_L2 | None | 0.998333 | 0.0 | 0.0 | 0.0 | 0.858653 | False | Autogluon | 0.007045 | 420500 | [amt] | 0.01 | 231011 | 0.001667 | None |
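
In this df_80 run every model has zero precision and recall, yet auc still reaches about 0.886 for LightGBM. ROC AUC only measures how well the scores rank fraud above non-fraud, whereas pre/rec/f1 are computed from hard labels obtained with a fixed cut-off (0.5 by default for AutoGluon binary predictions). The toy stdlib sketch below uses made-up scores, not notebook data, to show both numbers at once: a perfect ranking whose fraud scores never reach 0.5.

```python
def roc_auc(labels, scores):
    """ROC AUC as the probability that a random positive outscores a random
    negative (ties count half). O(n_pos * n_neg): fine for small examples."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def recall_at(labels, scores, threshold=0.5):
    """Share of positives whose score reaches the threshold."""
    pos_scores = [s for y, s in zip(labels, scores) if y == 1]
    return sum(s >= threshold for s in pos_scores) / len(pos_scores)


# Toy data (not from the notebook): frauds score higher but stay below 0.5.
labels = [0, 0, 0, 0, 0, 0, 1, 1]
scores = [0.01, 0.02, 0.03, 0.05, 0.08, 0.10, 0.30, 0.40]

print(roc_auc(labels, scores))                   # 1.0: perfect ranking
print(recall_at(labels, scores))                 # 0.0 at the 0.5 cut-off
print(recall_at(labels, scores, threshold=0.2))  # 1.0 with a lower cut-off
```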

auto_amt_ver0503(df_train3, df_80, _df3_mean_)No path specified. Models will be saved in: "AutogluonModels/ag-20240520_064813/"

AutoGluon infers your prediction problem is: 'binary' (because only two unique label-values observed).

If 'binary' is not the correct problem_type, please manually specify the problem_type parameter during predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression'])

| | model | time | acc | pre | rec | f1 | auc | graph_based | method | throw_rate | train_size | train_cols | train_frate | test_size | test_frate | hyper_params |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | KNeighborsUnif | None | 0.997074 | 0.010101 | 0.007792 | 0.008798 | 0.610442 | False | Autogluon | 0.014566 | 84100 | [amt] | 0.05 | 231011 | 0.001667 | None |
| 1 | KNeighborsDist | None | 0.996883 | 0.011662 | 0.010390 | 0.010989 | 0.592382 | False | Autogluon | 0.014566 | 84100 | [amt] | 0.05 | 231011 | 0.001667 | None |
| 2 | LightGBMXT | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.667233 | False | Autogluon | 0.014566 | 84100 | [amt] | 0.05 | 231011 | 0.001667 | None |
| 3 | LightGBM | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.890164 | False | Autogluon | 0.014566 | 84100 | [amt] | 0.05 | 231011 | 0.001667 | None |
| 4 | RandomForestGini | None | 0.996953 | 0.009231 | 0.007792 | 0.008451 | 0.665573 | False | Autogluon | 0.014566 | 84100 | [amt] | 0.05 | 231011 | 0.001667 | None |
| 5 | RandomForestEntr | None | 0.996953 | 0.009231 | 0.007792 | 0.008451 | 0.665573 | False | Autogluon | 0.014566 | 84100 | [amt] | 0.05 | 231011 | 0.001667 | None |
| 6 | CatBoost | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.849362 | False | Autogluon | 0.014566 | 84100 | [amt] | 0.05 | 231011 | 0.001667 | None |
| 7 | ExtraTreesGini | None | 0.997186 | 0.011070 | 0.007792 | 0.009146 | 0.679732 | False | Autogluon | 0.014566 | 84100 | [amt] | 0.05 | 231011 | 0.001667 | None |
| 8 | ExtraTreesEntr | None | 0.997186 | 0.011070 | 0.007792 | 0.009146 | 0.677500 | False | Autogluon | 0.014566 | 84100 | [amt] | 0.05 | 231011 | 0.001667 | None |
| 9 | NeuralNetFastAI | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.593622 | False | Autogluon | 0.014566 | 84100 | [amt] | 0.05 | 231011 | 0.001667 | None |
| 10 | XGBoost | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.854089 | False | Autogluon | 0.014566 | 84100 | [amt] | 0.05 | 231011 | 0.001667 | None |
| 11 | NeuralNetTorch | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.862387 | False | Autogluon | 0.014566 | 84100 | [amt] | 0.05 | 231011 | 0.001667 | None |
| 12 | LightGBMLarge | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.885963 | False | Autogluon | 0.014566 | 84100 | [amt] | 0.05 | 231011 | 0.001667 | None |
| 13 | WeightedEnsemble_L2 | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.869034 | False | Autogluon | 0.014566 | 84100 | [amt] | 0.05 | 231011 | 0.001667 | None |
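Many rows above report acc = 0.998333 together with pre = rec = f1 = 0. That is exactly what a model that never predicts fraud would score: with a test fraud rate of 0.001667, predicting 0 everywhere yields accuracy 1 − 0.001667 and no true positives. The minimal sketch below checks this arithmetic in plain Python. The helper `confusion_metrics` is hypothetical, and the fraud count of 385 is an assumption chosen because 385/231011 rounds to the reported test_frate (recall values such as 0.007792 = 3/385 are also consistent with it).

```python
def confusion_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for 0/1 labels (hypothetical helper).

    Precision, recall and F1 are returned as 0.0 when they are undefined,
    e.g. when the model never predicts the positive class.
    """
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = len(y_true) - tp - fp - fn
    acc = (tp + tn) / len(y_true)
    pre = tp / (tp + fp) if tp + fp else 0.0
    rec = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * pre * rec / (pre + rec) if pre + rec else 0.0
    return acc, pre, rec, f1


n_test = 231011  # test_size from the tables
n_fraud = 385    # assumption: 385 / 231011 rounds to test_frate 0.001667
y_true = [1] * n_fraud + [0] * (n_test - n_fraud)
y_pred = [0] * n_test  # a model that always predicts "not fraud"

acc, pre, rec, f1 = confusion_metrics(y_true, y_pred)
print(round(acc, 6), pre, rec, f1)  # 0.998333 0.0 0.0 0.0
```

So an accuracy of 0.998333 in these tables signals a degenerate all-negative classifier rather than a good one; the AUC column, which is threshold-free, is the more informative metric for those rows.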

```python
auto_amt_ver0503(df_train4, df_80, _df4_mean_)
```

No path specified. Models will be saved in: "AutogluonModels/ag-20240520_065005/"

AutoGluon infers your prediction problem is: 'binary' (because only two unique label-values observed).

If 'binary' is not the correct problem_type, please manually specify the problem_type parameter during predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression'])

| | model | time | acc | pre | rec | f1 | auc | graph_based | method | throw_rate | train_size | train_cols | train_frate | test_size | test_frate | hyper_params |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | KNeighborsUnif | None | 0.993780 | 0.006567 | 0.018182 | 0.009649 | 0.663976 | False | Autogluon | 0.016809 | 42050 | [amt] | 0.1 | 231011 | 0.001667 | None |
| 1 | KNeighborsDist | None | 0.991113 | 0.008255 | 0.036364 | 0.013455 | 0.618455 | False | Autogluon | 0.016809 | 42050 | [amt] | 0.1 | 231011 | 0.001667 | None |
| 2 | LightGBMXT | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.865731 | False | Autogluon | 0.016809 | 42050 | [amt] | 0.1 | 231011 | 0.001667 | None |
| 3 | LightGBM | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.888579 | False | Autogluon | 0.016809 | 42050 | [amt] | 0.1 | 231011 | 0.001667 | None |
| 4 | RandomForestGini | None | 0.989940 | 0.009114 | 0.046753 | 0.015254 | 0.728206 | False | Autogluon | 0.016809 | 42050 | [amt] | 0.1 | 231011 | 0.001667 | None |
| 5 | RandomForestEntr | None | 0.989940 | 0.009114 | 0.046753 | 0.015254 | 0.728206 | False | Autogluon | 0.016809 | 42050 | [amt] | 0.1 | 231011 | 0.001667 | None |
| 6 | CatBoost | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.877423 | False | Autogluon | 0.016809 | 42050 | [amt] | 0.1 | 231011 | 0.001667 | None |
| 7 | ExtraTreesGini | None | 0.990723 | 0.008939 | 0.041558 | 0.014713 | 0.758804 | False | Autogluon | 0.016809 | 42050 | [amt] | 0.1 | 231011 | 0.001667 | None |
| 8 | ExtraTreesEntr | None | 0.990710 | 0.008375 | 0.038961 | 0.013787 | 0.754776 | False | Autogluon | 0.016809 | 42050 | [amt] | 0.1 | 231011 | 0.001667 | None |
| 9 | NeuralNetFastAI | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.778624 | False | Autogluon | 0.016809 | 42050 | [amt] | 0.1 | 231011 | 0.001667 | None |
| 10 | XGBoost | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.857305 | False | Autogluon | 0.016809 | 42050 | [amt] | 0.1 | 231011 | 0.001667 | None |
| 11 | NeuralNetTorch | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.875832 | False | Autogluon | 0.016809 | 42050 | [amt] | 0.1 | 231011 | 0.001667 | None |
| 12 | LightGBMLarge | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.883690 | False | Autogluon | 0.016809 | 42050 | [amt] | 0.1 | 231011 | 0.001667 | None |
| 13 | WeightedEnsemble_L2 | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.886679 | False | Autogluon | 0.016809 | 42050 | [amt] | 0.1 | 231011 | 0.001667 | None |

```python
auto_amt_ver0503(df_train5, df_80, _df5_mean_)
```

No path specified. Models will be saved in: "AutogluonModels/ag-20240520_065116/"

AutoGluon infers your prediction problem is: 'binary' (because only two unique label-values observed).

If 'binary' is not the correct problem_type, please manually specify the problem_type parameter during predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression'])

| | model | time | acc | pre | rec | f1 | auc | graph_based | method | throw_rate | train_size | train_cols | train_frate | test_size | test_frate | hyper_params |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | KNeighborsUnif | None | 0.974651 | 0.009679 | 0.140260 | 0.018109 | 0.762008 | False | Autogluon | 0.018212 | 21025 | [amt] | 0.2 | 231011 | 0.001667 | None |
| 1 | KNeighborsDist | None | 0.968343 | 0.007955 | 0.145455 | 0.015084 | 0.686752 | False | Autogluon | 0.018212 | 21025 | [amt] | 0.2 | 231011 | 0.001667 | None |
| 2 | LightGBMXT | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.868728 | False | Autogluon | 0.018212 | 21025 | [amt] | 0.2 | 231011 | 0.001667 | None |
| 3 | LightGBM | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.888796 | False | Autogluon | 0.018212 | 21025 | [amt] | 0.2 | 231011 | 0.001667 | None |
| 4 | RandomForestGini | None | 0.961876 | 0.008405 | 0.187013 | 0.016088 | 0.790183 | False | Autogluon | 0.018212 | 21025 | [amt] | 0.2 | 231011 | 0.001667 | None |
| 5 | RandomForestEntr | None | 0.961876 | 0.008405 | 0.187013 | 0.016088 | 0.790183 | False | Autogluon | 0.018212 | 21025 | [amt] | 0.2 | 231011 | 0.001667 | None |
| 6 | CatBoost | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.891512 | False | Autogluon | 0.018212 | 21025 | [amt] | 0.2 | 231011 | 0.001667 | None |
| 7 | ExtraTreesGini | None | 0.964097 | 0.009063 | 0.189610 | 0.017299 | 0.804425 | False | Autogluon | 0.018212 | 21025 | [amt] | 0.2 | 231011 | 0.001667 | None |
| 8 | ExtraTreesEntr | None | 0.963664 | 0.008831 | 0.187013 | 0.016866 | 0.809713 | False | Autogluon | 0.018212 | 21025 | [amt] | 0.2 | 231011 | 0.001667 | None |
| 9 | NeuralNetFastAI | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.855702 | False | Autogluon | 0.018212 | 21025 | [amt] | 0.2 | 231011 | 0.001667 | None |
| 10 | XGBoost | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.863929 | False | Autogluon | 0.018212 | 21025 | [amt] | 0.2 | 231011 | 0.001667 | None |
| 11 | NeuralNetTorch | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.862611 | False | Autogluon | 0.018212 | 21025 | [amt] | 0.2 | 231011 | 0.001667 | None |
| 12 | LightGBMLarge | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.884554 | False | Autogluon | 0.018212 | 21025 | [amt] | 0.2 | 231011 | 0.001667 | None |
| 13 | WeightedEnsemble_L2 | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.889678 | False | Autogluon | 0.018212 | 21025 | [amt] | 0.2 | 231011 | 0.001667 | None |

```python
auto_amt_ver0503(df_train6, df_80, _df6_mean_)
```

No path specified. Models will be saved in: "AutogluonModels/ag-20240520_065157/"

AutoGluon infers your prediction problem is: 'binary' (because only two unique label-values observed).

If 'binary' is not the correct problem_type, please manually specify the problem_type parameter during predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression'])

| | model | time | acc | pre | rec | f1 | auc | graph_based | method | throw_rate | train_size | train_cols | train_frate | test_size | test_frate | hyper_params |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | KNeighborsUnif | None | 0.949574 | 0.009408 | 0.280519 | 0.018205 | 0.808472 | False | Autogluon | 0.018733 | 14017 | [amt] | 0.299993 | 231011 | 0.001667 | None |
| 1 | KNeighborsDist | None | 0.942470 | 0.008381 | 0.285714 | 0.016284 | 0.725144 | False | Autogluon | 0.018733 | 14017 | [amt] | 0.299993 | 231011 | 0.001667 | None |
| 2 | LightGBMXT | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.860889 | False | Autogluon | 0.018733 | 14017 | [amt] | 0.299993 | 231011 | 0.001667 | None |
| 3 | LightGBM | None | 0.985395 | 0.014300 | 0.114286 | 0.025419 | 0.890098 | False | Autogluon | 0.018733 | 14017 | [amt] | 0.299993 | 231011 | 0.001667 | None |
| 4 | RandomForestGini | None | 0.930609 | 0.007616 | 0.314286 | 0.014872 | 0.818151 | False | Autogluon | 0.018733 | 14017 | [amt] | 0.299993 | 231011 | 0.001667 | None |
| 5 | RandomForestEntr | None | 0.930609 | 0.007616 | 0.314286 | 0.014872 | 0.818151 | False | Autogluon | 0.018733 | 14017 | [amt] | 0.299993 | 231011 | 0.001667 | None |
| 6 | CatBoost | None | 0.993758 | 0.010195 | 0.028571 | 0.015027 | 0.883878 | False | Autogluon | 0.018733 | 14017 | [amt] | 0.299993 | 231011 | 0.001667 | None |
| 7 | ExtraTreesGini | None | 0.932977 | 0.008337 | 0.332468 | 0.016265 | 0.830028 | False | Autogluon | 0.018733 | 14017 | [amt] | 0.299993 | 231011 | 0.001667 | None |
| 8 | ExtraTreesEntr | None | 0.934016 | 0.008404 | 0.329870 | 0.016390 | 0.831543 | False | Autogluon | 0.018733 | 14017 | [amt] | 0.299993 | 231011 | 0.001667 | None |
| 9 | NeuralNetFastAI | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.604243 | False | Autogluon | 0.018733 | 14017 | [amt] | 0.299993 | 231011 | 0.001667 | None |
| 10 | XGBoost | None | 0.982451 | 0.014811 | 0.145455 | 0.026884 | 0.889313 | False | Autogluon | 0.018733 | 14017 | [amt] | 0.299993 | 231011 | 0.001667 | None |
| 11 | NeuralNetTorch | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.861303 | False | Autogluon | 0.018733 | 14017 | [amt] | 0.299993 | 231011 | 0.001667 | None |
| 12 | LightGBMLarge | None | 0.989671 | 0.013612 | 0.072727 | 0.022932 | 0.885505 | False | Autogluon | 0.018733 | 14017 | [amt] | 0.299993 | 231011 | 0.001667 | None |
| 13 | WeightedEnsemble_L2 | None | 0.989671 | 0.013612 | 0.072727 | 0.022932 | 0.885505 | False | Autogluon | 0.018733 | 14017 | [amt] | 0.299993 | 231011 | 0.001667 | None |

```python
auto_amt_ver0503(df_train7, df_80, _df7_mean_)
```

No path specified. Models will be saved in: "AutogluonModels/ag-20240520_065229/"

AutoGluon infers your prediction problem is: 'binary' (because only two unique label-values observed).

If 'binary' is not the correct problem_type, please manually specify the problem_type parameter during predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression'])

| | model | time | acc | pre | rec | f1 | auc | graph_based | method | throw_rate | train_size | train_cols | train_frate | test_size | test_frate | hyper_params |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | KNeighborsUnif | None | 0.916411 | 0.008416 | 0.420779 | 0.016502 | 0.828619 | False | Autogluon | 0.019004 | 10512 | [amt] | 0.400019 | 231011 | 0.001667 | None |
| 1 | KNeighborsDist | None | 0.912039 | 0.007753 | 0.407792 | 0.015218 | 0.762284 | False | Autogluon | 0.019004 | 10512 | [amt] | 0.400019 | 231011 | 0.001667 | None |
| 2 | LightGBMXT | None | 0.963513 | 0.013546 | 0.290909 | 0.025887 | 0.876453 | False | Autogluon | 0.019004 | 10512 | [amt] | 0.400019 | 231011 | 0.001667 | None |
| 3 | LightGBM | None | 0.948557 | 0.012878 | 0.394805 | 0.024943 | 0.889180 | False | Autogluon | 0.019004 | 10512 | [amt] | 0.400019 | 231011 | 0.001667 | None |
| 4 | RandomForestGini | None | 0.893364 | 0.006753 | 0.431169 | 0.013298 | 0.833487 | False | Autogluon | 0.019004 | 10512 | [amt] | 0.400019 | 231011 | 0.001667 | None |
| 5 | RandomForestEntr | None | 0.893364 | 0.006753 | 0.431169 | 0.013298 | 0.833487 | False | Autogluon | 0.019004 | 10512 | [amt] | 0.400019 | 231011 | 0.001667 | None |
| 6 | CatBoost | None | 0.965032 | 0.013532 | 0.277922 | 0.025808 | 0.880542 | False | Autogluon | 0.019004 | 10512 | [amt] | 0.400019 | 231011 | 0.001667 | None |
| 7 | ExtraTreesGini | None | 0.901057 | 0.007668 | 0.454545 | 0.015082 | 0.846933 | False | Autogluon | 0.019004 | 10512 | [amt] | 0.400019 | 231011 | 0.001667 | None |
| 8 | ExtraTreesEntr | None | 0.900381 | 0.007702 | 0.459740 | 0.015150 | 0.843639 | False | Autogluon | 0.019004 | 10512 | [amt] | 0.400019 | 231011 | 0.001667 | None |
| 9 | NeuralNetFastAI | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.604243 | False | Autogluon | 0.019004 | 10512 | [amt] | 0.400019 | 231011 | 0.001667 | None |
| 10 | XGBoost | None | 0.945548 | 0.012786 | 0.415584 | 0.024808 | 0.886951 | False | Autogluon | 0.019004 | 10512 | [amt] | 0.400019 | 231011 | 0.001667 | None |
| 11 | NeuralNetTorch | None | 0.950418 | 0.011561 | 0.340260 | 0.022363 | 0.868049 | False | Autogluon | 0.019004 | 10512 | [amt] | 0.400019 | 231011 | 0.001667 | None |
| 12 | LightGBMLarge | None | 0.945418 | 0.013453 | 0.438961 | 0.026106 | 0.887662 | False | Autogluon | 0.019004 | 10512 | [amt] | 0.400019 | 231011 | 0.001667 | None |
| 13 | WeightedEnsemble_L2 | None | 0.948557 | 0.012878 | 0.394805 | 0.024943 | 0.889180 | False | Autogluon | 0.019004 | 10512 | [amt] | 0.400019 | 231011 | 0.001667 | None |

```python
auto_amt_ver0503(df_train8, df_80, _df8_mean_)
```

No path specified. Models will be saved in: "AutogluonModels/ag-20240520_065302/"

AutoGluon infers your prediction problem is: 'binary' (because only two unique label-values observed).

If 'binary' is not the correct problem_type, please manually specify the problem_type parameter during predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression'])

| | model | time | acc | pre | rec | f1 | auc | graph_based | method | throw_rate | train_size | train_cols | train_frate | test_size | test_frate | hyper_params |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | KNeighborsUnif | None | 0.876305 | 0.007306 | 0.542857 | 0.014417 | 0.835443 | False | Autogluon | 0.019171 | 8410 | [amt] | 0.5 | 231011 | 0.001667 | None |
| 1 | KNeighborsDist | None | 0.879227 | 0.007165 | 0.519481 | 0.014134 | 0.761894 | False | Autogluon | 0.019171 | 8410 | [amt] | 0.5 | 231011 | 0.001667 | None |
| 2 | LightGBMXT | None | 0.878248 | 0.008225 | 0.602597 | 0.016229 | 0.861540 | False | Autogluon | 0.019171 | 8410 | [amt] | 0.5 | 231011 | 0.001667 | None |
| 3 | LightGBM | None | 0.916974 | 0.010827 | 0.540260 | 0.021229 | 0.887029 | False | Autogluon | 0.019171 | 8410 | [amt] | 0.5 | 231011 | 0.001667 | None |
| 4 | RandomForestGini | None | 0.864556 | 0.006673 | 0.542857 | 0.013183 | 0.837880 | False | Autogluon | 0.019171 | 8410 | [amt] | 0.5 | 231011 | 0.001667 | None |
| 5 | RandomForestEntr | None | 0.864556 | 0.006673 | 0.542857 | 0.013183 | 0.837880 | False | Autogluon | 0.019171 | 8410 | [amt] | 0.5 | 231011 | 0.001667 | None |
| 6 | CatBoost | None | 0.900442 | 0.009840 | 0.589610 | 0.019358 | 0.888977 | False | Autogluon | 0.019171 | 8410 | [amt] | 0.5 | 231011 | 0.001667 | None |
| 7 | ExtraTreesGini | None | 0.868123 | 0.007015 | 0.555844 | 0.013854 | 0.841006 | False | Autogluon | 0.019171 | 8410 | [amt] | 0.5 | 231011 | 0.001667 | None |
| 8 | ExtraTreesEntr | None | 0.869738 | 0.007003 | 0.548052 | 0.013830 | 0.842724 | False | Autogluon | 0.019171 | 8410 | [amt] | 0.5 | 231011 | 0.001667 | None |
| 9 | NeuralNetFastAI | None | 0.998333 | 0.000000 | 0.000000 | 0.000000 | 0.604243 | False | Autogluon | 0.019171 | 8410 | [amt] | 0.5 | 231011 | 0.001667 | None |
| 10 | XGBoost | None | 0.907489 | 0.010131 | 0.563636 | 0.019904 | 0.887128 | False | Autogluon | 0.019171 | 8410 | [amt] | 0.5 | 231011 | 0.001667 | None |
| 11 | NeuralNetTorch | None | 0.842518 | 0.007579 | 0.719481 | 0.015000 | 0.859309 | False | Autogluon | 0.019171 | 8410 | [amt] | 0.5 | 231011 | 0.001667 | None |
| 12 | LightGBMLarge | None | 0.890689 | 0.009081 | 0.597403 | 0.017890 | 0.882991 | False | Autogluon | 0.019171 | 8410 | [amt] | 0.5 | 231011 | 0.001667 | None |
| 13 | WeightedEnsemble_L2 | None | 0.848488 | 0.007736 | 0.706494 | 0.015305 | 0.861127 | False | Autogluon | 0.019171 | 8410 | [amt] | 0.5 | 231011 | 0.001667 | None |
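Read together, the amt-only runs show a clear trade-off: as the fraud rate of the training sample (train_frate) rises from 0.01 to 0.5, recall on the test set goes up while accuracy goes down. The sketch below simply re-tabulates the RandomForestGini numbers reported in this section (including the train_frate 0.01 row near the top) and, as a consistency check, multiplies each recall by an assumed 385 test frauds (385/231011 rounds to the reported test_frate of 0.001667). Every product lands on a whole number of detected frauds, which suggests the table values are mutually consistent.

```python
# (train_frate, acc, rec) for RandomForestGini, copied from the result tables above
rf_gini = [
    (0.01, 0.998333, 0.0),
    (0.05, 0.996953, 0.007792),
    (0.1, 0.989940, 0.046753),
    (0.2, 0.961876, 0.187013),
    (0.299993, 0.930609, 0.314286),
    (0.400019, 0.893364, 0.431169),
    (0.5, 0.864556, 0.542857),
]
N_FRAUD_TEST = 385  # assumption: 385 / 231011 rounds to test_frate 0.001667

for frate, acc, rec in rf_gini:
    detected = round(rec * N_FRAUD_TEST)  # recall x positives = frauds actually caught
    print(f"train_frate={frate:<9} acc={acc:.6f} rec={rec:.6f} detected~{detected}")

recalls = [rec for _, _, rec in rf_gini]
accs = [acc for _, acc, _ in rf_gini]
print(all(a < b for a, b in zip(recalls, recalls[1:])))  # recall strictly increases
print(all(a > b for a, b in zip(accs, accs[1:])))        # accuracy strictly decreases
```

In other words, undersampling the non-fraud class mainly shifts where the model puts its decision boundary; it buys recall at the cost of many more false alarms, which is why precision stays below 0.01 in every amt-only run.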

Adding the other features

```python
def auto_amt_ver0520(df_tr, df_tst, _df_mean):
    # Use all raw columns as features this time (not only amt); is_fraud is the label
    cols = ['trans_date_trans_time', 'merchant', 'category', 'amt',
            'first', 'last', 'gender', 'street', 'city', 'state', 'zip', 'lat',
            'long', 'city_pop', 'job', 'dob', 'trans_num', 'unix_time', 'merch_lat',
            'merch_long', 'is_fraud']
    df_tr = df_tr[cols]
    df_tst = df_tst[cols]
    tr = TabularDataset(df_tr)
    tst = TabularDataset(df_tst)
    predictr = TabularPredictor(label="is_fraud", verbosity=1)
    t1 = time.time()
    predictr.fit(tr)
    t2 = time.time()
    time_diff = t2 - t1  # total fit time for all models (not stored in the results)
    models = predictr._trainer.model_graph.nodes  # names of every trained model (private attribute)
    results = []
    for model_name in models:
        # Evaluate the model on the test set
        eval_result = predictr.evaluate(tst, model=model_name)
        # Collect this model's metrics for the results DataFrame
        results.append({'model': model_name,
                        'acc': eval_result['accuracy'],
                        'pre': eval_result['precision'],
                        'rec': eval_result['recall'],
                        'f1': eval_result['f1'],
                        'auc': eval_result['roc_auc']})
    model = []
    times = []  # renamed from time_diff so the total fit time above is not overwritten
    acc = []
    pre = []
    rec = []
    f1 = []
    auc = []
    graph_based = []
    method = []
    throw_rate = []
    train_size = []
    train_cols = []
    train_frate = []
    test_size = []
    test_frate = []
    hyper_params = []
    for result in results:
        model_name = result['model']
        model.append(model_name)
        times.append(None)  # Intended to record each model's training time, but this did not work, so None is stored
        acc.append(result['acc'])
        pre.append(result['pre'])
        rec.append(result['rec'])
        f1.append(result['f1'])
        auc.append(result['auc'])
        graph_based.append(False)
        method.append('Autogluon')
        throw_rate.append(_df_mean)
        train_size.append(len(tr))
        train_cols.append([col for col in tr.columns if col != 'is_fraud'])
        train_frate.append(tr.is_fraud.mean())
        test_size.append(len(tst))
        test_frate.append(tst.is_fraud.mean())
        hyper_params.append(None)
    df_results = pd.DataFrame(dict(
        model=model,
        time=times,
        acc=acc,
        pre=pre,
        rec=rec,
        f1=f1,
        auc=auc,
        graph_based=graph_based,
        method=method,
        throw_rate=throw_rate,
        train_size=train_size,
        train_cols=train_cols,
        train_frate=train_frate,
        test_size=test_size,
        test_frate=test_frate,
        hyper_params=hyper_params
    ))
    # Save the results with a timestamped file name
    ymdhms = datetime.datetime.fromtimestamp(time.time()).strftime('%Y%m%d-%H%M%S')
    df_results.to_csv(f'../results2/{ymdhms}-Autogluon.csv', index=False)
    return df_results
```

```python
auto_amt_ver0520(df_train1, df_test, _df1_mean)
```

No path specified. Models will be saved in: "AutogluonModels/ag-20240520_094915/"

AutoGluon infers your prediction problem is: 'binary' (because only two unique label-values observed).

If 'binary' is not the correct problem_type, please manually specify the problem_type parameter during predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression'])

| | model | time | acc | pre | rec | f1 | auc | graph_based | method | throw_rate | train_size | train_cols | train_frate | test_size | test_frate | hyper_params |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | KNeighborsUnif | None | 0.997854 | 0.774099 | 0.882843 | 0.824903 | 0.996391 | False | Autogluon | 0.005728 | 734003 | [trans_date_trans_time, merchant, category, amt, first, last, gender, street, city, state, zip, lat, long, city_pop, job, dob, trans_num, unix_time, merch_lat, merch_long] | 0.005729 | 314572 | 0.005725 | None |
| 1 | KNeighborsDist | None | 0.998760 | 0.850472 | 0.950583 | 0.897745 | 0.996693 | False | Autogluon | 0.005728 | 734003 | [trans_date_trans_time, merchant, category, amt, first, last, gender, street, city, state, zip, lat, long, city_pop, job, dob, trans_num, unix_time, merch_lat, merch_long] | 0.005729 | 314572 | 0.005725 | None |
| 2 | LightGBMXT | None | 0.997641 | 0.940150 | 0.627984 | 0.752996 | 0.995792 | False | Autogluon | 0.005728 | 734003 | [trans_date_trans_time, merchant, category, amt, first, last, gender, street, city, state, zip, lat, long, city_pop, job, dob, trans_num, unix_time, merch_lat, merch_long] | 0.005729 | 314572 | 0.005725 | None |
| 3 | LightGBM | None | 0.997266 | 0.838246 | 0.647418 | 0.730576 | 0.992670 | False | Autogluon | 0.005728 | 734003 | [trans_date_trans_time, merchant, category, amt, first, last, gender, street, city, state, zip, lat, long, city_pop, job, dob, trans_num, unix_time, merch_lat, merch_long] | 0.005729 | 314572 | 0.005725 | None |
| 4 | RandomForestGini | None | 0.997965 | 0.955294 | 0.676291 | 0.791938 | 0.998141 | False | Autogluon | 0.005728 | 734003 | [trans_date_trans_time, merchant, category, amt, first, last, gender, street, city, state, zip, lat, long, city_pop, job, dob, trans_num, unix_time, merch_lat, merch_long] | 0.005729 | 314572 | 0.005725 | None |
| 5 | RandomForestEntr | None | 0.998163 | 0.978857 | 0.694059 | 0.812216 | 0.998040 | False | Autogluon | 0.005728 | 734003 | [trans_date_trans_time, merchant, category, amt, first, last, gender, street, city, state, zip, lat, long, city_pop, job, dob, trans_num, unix_time, merch_lat, merch_long] | 0.005729 | 314572 | 0.005725 | None |
| 6 | CatBoost | None | 0.998404 | 0.940937 | 0.769572 | 0.846671 | 0.983846 | False | Autogluon | 0.005728 | 734003 | [trans_date_trans_time, merchant, category, amt, first, last, gender, street, city, state, zip, lat, long, city_pop, job, dob, trans_num, unix_time, merch_lat, merch_long] | 0.005729 | 314572 | 0.005725 | None |
| 7 | ExtraTreesGini | None | 0.997603 | 0.995270 | 0.584120 | 0.736179 | 0.999165 | False | Autogluon | 0.005728 | 734003 | [trans_date_trans_time, merchant, category, amt, first, last, gender, street, city, state, zip, lat, long, city_pop, job, dob, trans_num, unix_time, merch_lat, merch_long] | 0.005729 | 314572 | 0.005725 | None |
| 8 | ExtraTreesEntr | None | 0.997597 | 0.994324 | 0.583565 | 0.735479 | 0.999239 | False | Autogluon | 0.005728 | 734003 | [trans_date_trans_time, merchant, category, amt, first, last, gender, street, city, state, zip, lat, long, city_pop, job, dob, trans_num, unix_time, merch_lat, merch_long] | 0.005729 | 314572 | 0.005725 | None |
| 9 | NeuralNetFastAI | None | 0.998992 | 0.944311 | 0.875625 | 0.908672 | 0.997173 | False | Autogluon | 0.005728 | 734003 | [trans_date_trans_time, merchant, category, amt, first, last, gender, street, city, state, zip, lat, long, city_pop, job, dob, trans_num, unix_time, merch_lat, merch_long] | 0.005729 | 314572 | 0.005725 | None |
| 10 | XGBoost | None | 0.997253 | 0.840727 | 0.641866 | 0.727960 | 0.980501 | False | Autogluon | 0.005728 | 734003 | [trans_date_trans_time, merchant, category, amt, first, last, gender, street, city, state, zip, lat, long, city_pop, job, dob, trans_num, unix_time, merch_lat, merch_long] | 0.005729 | 314572 | 0.005725 | None |
| 11 | NeuralNetTorch | None | 0.997619 | 0.865786 | 0.691283 | 0.768756 | 0.993116 | False | Autogluon | 0.005728 | 734003 | [trans_date_trans_time, merchant, category, amt, first, last, gender, street, city, state, zip, lat, long, city_pop, job, dob, trans_num, unix_time, merch_lat, merch_long] | 0.005729 | 314572 | 0.005725 | None |
| 12 | LightGBMLarge | None | 0.997543 | 0.858939 | 0.682954 | 0.760903 | 0.990375 | False | Autogluon | 0.005728 | 734003 | [trans_date_trans_time, merchant, category, amt, first, last, gender, street, city, state, zip, lat, long, city_pop, job, dob, trans_num, unix_time, merch_lat, merch_long] | 0.005729 | 314572 | 0.005725 | None |
| 13 | WeightedEnsemble_L2 | None | 0.999399 | 0.975797 | 0.917823 | 0.945923 | 0.999333 | False | Autogluon | 0.005728 | 734003 | [trans_date_trans_time, merchant, category, amt, first, last, gender, street, city, state, zip, lat, long, city_pop, job, dob, trans_num, unix_time, merch_lat, merch_long] | 0.005729 | 314572 | 0.005725 | None |

```python
Xcolumns = ['category', 'amt', 'gender', 'street', 'city', 'state', 'zip', 'lat', 'long', 'city_pop', 'job', 'unix_time', 'is_fraud']
df_tr = df_train1[Xcolumns]
df_tst = df_test[Xcolumns]
```

```python
tr = TabularDataset(df_tr)
tst = TabularDataset(df_tst)
predictr = TabularPredictor(label="is_fraud", verbosity=1)
```

No path specified. Models will be saved in: "AutogluonModels/ag-20240520_103239/"

```python
predictr.fit(tr)
```

AutoGluon infers your prediction problem is: 'binary' (because only two unique label-values observed).

If 'binary' is not the correct problem_type, please manually specify the problem_type parameter during predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression'])

`<autogluon.tabular.predictor.predictor.TabularPredictor at 0x7f6099bb3400>`

```python
# Probability of the positive class (is_fraud == 1): the last column of predict_proba
yyhat_prob = predictr.predict_proba(tst).iloc[:, -1]
```

```python
df_tst_compact = tst.assign(yyhat_prob=yyhat_prob).loc[:, ['amt', 'is_fraud', 'yyhat_prob']]
```

```python
df_tst_compact[df_tst_compact.amt < 80]
```

| | amt | is_fraud | yyhat_prob |
|---|---|---|---|
| 0 | 7.53 | 0 | 0.001258 |
| 1 | 3.79 | 0 | 0.001710 |
| 2 | 59.07 | 0 | 0.002354 |
| 3 | 25.58 | 0 | 0.000962 |
| 5 | 20.59 | 0 | 0.004030 |
| ... | ... | ... | ... |
| 314538 | 19.68 | 1 | 0.005087 |
| 314558 | 12.43 | 1 | 0.064472 |
| 314563 | 20.51 | 1 | 0.003546 |
| 314566 | 17.83 | 1 | 0.003011 |
| 314571 | 12.57 | 1 | 0.995636 |

230999 rows × 3 columns

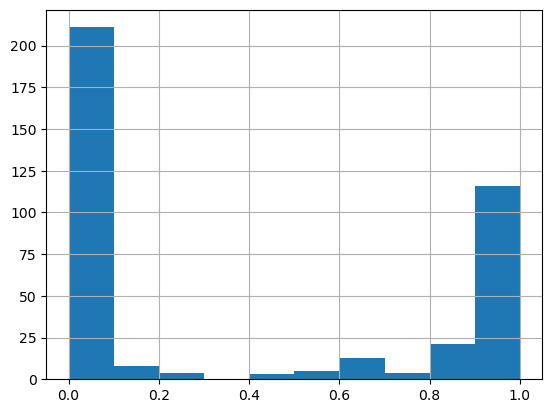

```python
# Histogram of predicted fraud probabilities for actual frauds with amt < 80
df_tst_compact[
    (df_tst_compact.amt < 80) & (df_tst_compact.is_fraud == 1)
].yyhat_prob.hist()
```
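The histogram looks only at actual frauds, and several of them receive very low predicted probabilities (for example 0.003546 and 0.003011 in the table above), so the default 0.5 cut-off used below misses them. A common follow-up is to sweep the decision threshold and watch precision and recall move in opposite directions. The sketch below does this in plain Python on a small hypothetical set of labels and probabilities, not on the notebook's data; the helper `precision_recall_at` is also hypothetical. With the notebook's data one would pass the `is_fraud` and `yyhat_prob` columns of `df_tst_compact[df_tst_compact.amt < 80]` instead.

```python
def precision_recall_at(y_true, y_prob, threshold):
    """Precision and recall when fraud is predicted for y_prob >= threshold (hypothetical helper)."""
    preds = [p >= threshold for p in y_prob]
    tp = sum(1 for t, p in zip(y_true, preds) if t == 1 and p)
    fp = sum(1 for t, p in zip(y_true, preds) if t == 0 and p)
    fn = sum(1 for t, p in zip(y_true, preds) if t == 1 and not p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical toy data: 4 frauds (label 1) followed by 6 normal transactions (label 0)
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_prob = [0.99, 0.60, 0.06, 0.004, 0.70, 0.05, 0.01, 0.003, 0.002, 0.001]

for th in (0.5, 0.05, 0.003):
    p, r = precision_recall_at(y_true, y_prob, th)
    print(f"threshold={th}: precision={p:.3f} recall={r:.3f}")
# threshold=0.5: precision=0.667 recall=0.500
# threshold=0.05: precision=0.600 recall=0.750
# threshold=0.003: precision=0.500 recall=1.000
```

Lowering the threshold catches more frauds but also flags more normal transactions; which point is acceptable depends on the relative cost of a missed fraud versus a false alarm.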

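```python
import sklearn.metrics  # `import sklearn` alone does not guarantee the metrics submodule is loaded

# F1 at the default 0.5 threshold, restricted to transactions with amt < 80
sklearn.metrics.f1_score(
    df_tst_compact[df_tst_compact.amt < 80].is_fraud,
    df_tst_compact[df_tst_compact.amt < 80].yyhat_prob > 0.5
)
```

0.5813528336380257

```python
sklearn.metrics.recall_score(
    df_tst_compact[df_tst_compact.amt < 80].is_fraud,
    df_tst_compact[df_tst_compact.amt < 80].yyhat_prob > 0.5
)
```

0.412987012987013

```python
sklearn.metrics.precision_score(
    df_tst_compact[df_tst_compact.amt < 80].is_fraud,
    df_tst_compact[df_tst_compact.amt < 80].yyhat_prob > 0.5
)
```

0.9814814814814815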
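These three outputs are consistent with one another: F1 is the harmonic mean of precision and recall, so the printed F1 can be recovered from the other two numbers. A minimal check that plugs in the values printed above:

```python
precision = 0.9814814814814815  # printed by precision_score above
recall = 0.412987012987013      # printed by recall_score above

# F1 = harmonic mean of precision and recall
f1 = 2 * precision * recall / (precision + recall)
print(round(f1, 10))  # 0.5813528336
```

Because the harmonic mean is dominated by the smaller of the two values, the low recall (about 0.41) pulls F1 down to about 0.58 even though precision is about 0.98: for amt < 80 the model is rarely wrong when it flags fraud, but it flags only about four in ten frauds.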