13wk-57: House Prices / Data Analysis (Autogluon)

#!pip install autogluon.eda

최규빈

2023-12-01

1. Lecture Video

https://youtu.be/playlist?list=PLQqh36zP38-x-PYcds3K7ck8ELQyVlVoN&si=ZCdvUB2r4dQ7cnQx

2. Imports

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

#---#

from autogluon.tabular import TabularPredictor

import autogluon.eda.auto as auto

#---#

import warnings

warnings.filterwarnings('ignore')

3. Data

ref: https://www.kaggle.com/competitions/house-prices-advanced-regression-techniques/overview

!kaggle competitions download -c house-prices-advanced-regression-techniques

Warning: Your Kaggle API key is readable by other users on this system! To fix this, you can run 'chmod 600 /home/coco/.kaggle/kaggle.json'

Downloading house-prices-advanced-regression-techniques.zip to /home/coco/Dropbox/Class/STBDA23/posts

100%|█████████████████████████████████████████| 199k/199k [00:00<00:00, 436kB/s]

!unzip house-prices-advanced-regression-techniques.zip

Archive: house-prices-advanced-regression-techniques.zip

inflating: data_description.txt

inflating: sample_submission.csv

inflating: test.csv

inflating: train.csv

df_submission = pd.read_csv("sample_submission.csv")

df_train = pd.read_csv("train.csv")

df_test = pd.read_csv("test.csv")

!rm sample_submission.csv

!rm train.csv

!rm test.csv

!rm data_description.txt

!rm house-prices-advanced-regression-techniques.zip

4. Fitting
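If no validation data is passed, `TabularPredictor.fit` takes part of the training data as a holdout set. The log below reports `holdout_frac=0.2`, which gives 1168 training rows and 292 validation rows out of 1460. The sketch below shows such a split using only the standard library. It assumes a plain shuffled split, and AutoGluon's internal splitting logic may differ. The helper name `holdout_split` is hypothetical.

```python
import random

def holdout_split(n_rows, holdout_frac=0.2, seed=0):
    """Hypothetical helper: return (train_idx, val_idx) for a shuffled holdout split."""
    indices = list(range(n_rows))
    random.Random(seed).shuffle(indices)          # reproducible shuffle
    n_val = int(round(n_rows * holdout_frac))     # 1460 * 0.2 -> 292 validation rows
    val_idx = sorted(indices[:n_val])
    train_idx = sorted(indices[n_val:])
    return train_idx, val_idx

train_idx, val_idx = holdout_split(1460, holdout_frac=0.2)
print(len(train_idx), len(val_idx))  # 1168 292
```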

set(df_train.columns) - set(df_test.columns)

{'SalePrice'}

# step1 -- pass

# step2

predictr = TabularPredictor(label='SalePrice')

# step3

predictr.fit(df_train)

# step4

yhat = predictr.predict(df_train)

yyhat = predictr.predict(df_test)

No path specified. Models will be saved in: "AutogluonModels/ag-20231210_084023/"

Beginning AutoGluon training ...

AutoGluon will save models to "AutogluonModels/ag-20231210_084023/"

AutoGluon Version: 0.8.2

Python Version: 3.8.18

Operating System: Linux

Platform Machine: x86_64

Platform Version: #38~22.04.1-Ubuntu SMP PREEMPT_DYNAMIC Thu Nov 2 18:01:13 UTC 2

Disk Space Avail: 643.98 GB / 982.82 GB (65.5%)

Train Data Rows: 1460

Train Data Columns: 80

Label Column: SalePrice

Preprocessing data ...

AutoGluon infers your prediction problem is: 'regression' (because dtype of label-column == int and many unique label-values observed).

Label info (max, min, mean, stddev): (755000, 34900, 180921.19589, 79442.50288)

If 'regression' is not the correct problem_type, please manually specify the problem_type parameter during predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression'])

Using Feature Generators to preprocess the data ...

Fitting AutoMLPipelineFeatureGenerator...

Available Memory: 128242.01 MB

Train Data (Original) Memory Usage: 4.06 MB (0.0% of available memory)

Inferring data type of each feature based on column values. Set feature_metadata_in to manually specify special dtypes of the features.

Stage 1 Generators:

Fitting AsTypeFeatureGenerator...

Note: Converting 3 features to boolean dtype as they only contain 2 unique values.

Stage 2 Generators:

Fitting FillNaFeatureGenerator...

Stage 3 Generators:

Fitting IdentityFeatureGenerator...

Fitting CategoryFeatureGenerator...

Fitting CategoryMemoryMinimizeFeatureGenerator...

Stage 4 Generators:

Fitting DropUniqueFeatureGenerator...

Stage 5 Generators:

Fitting DropDuplicatesFeatureGenerator...

Types of features in original data (raw dtype, special dtypes):

('float', []) : 3 | ['LotFrontage', 'MasVnrArea', 'GarageYrBlt']

('int', []) : 34 | ['Id', 'MSSubClass', 'LotArea', 'OverallQual', 'OverallCond', ...]

('object', []) : 43 | ['MSZoning', 'Street', 'Alley', 'LotShape', 'LandContour', ...]

Types of features in processed data (raw dtype, special dtypes):

('category', []) : 40 | ['MSZoning', 'Alley', 'LotShape', 'LandContour', 'LotConfig', ...]

('float', []) : 3 | ['LotFrontage', 'MasVnrArea', 'GarageYrBlt']

('int', []) : 34 | ['Id', 'MSSubClass', 'LotArea', 'OverallQual', 'OverallCond', ...]

('int', ['bool']) : 3 | ['Street', 'Utilities', 'CentralAir']

0.2s = Fit runtime

80 features in original data used to generate 80 features in processed data.

Train Data (Processed) Memory Usage: 0.52 MB (0.0% of available memory)

Data preprocessing and feature engineering runtime = 0.18s ...

AutoGluon will gauge predictive performance using evaluation metric: 'root_mean_squared_error'

This metric's sign has been flipped to adhere to being higher_is_better. The metric score can be multiplied by -1 to get the metric value.

To change this, specify the eval_metric parameter of Predictor()

Automatically generating train/validation split with holdout_frac=0.2, Train Rows: 1168, Val Rows: 292

User-specified model hyperparameters to be fit:

{

'NN_TORCH': {},

'GBM': [{'extra_trees': True, 'ag_args': {'name_suffix': 'XT'}}, {}, 'GBMLarge'],

'CAT': {},

'XGB': {},

'FASTAI': {},

'RF': [{'criterion': 'gini', 'ag_args': {'name_suffix': 'Gini', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'entropy', 'ag_args': {'name_suffix': 'Entr', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'squared_error', 'ag_args': {'name_suffix': 'MSE', 'problem_types': ['regression', 'quantile']}}],

'XT': [{'criterion': 'gini', 'ag_args': {'name_suffix': 'Gini', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'entropy', 'ag_args': {'name_suffix': 'Entr', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'squared_error', 'ag_args': {'name_suffix': 'MSE', 'problem_types': ['regression', 'quantile']}}],

'KNN': [{'weights': 'uniform', 'ag_args': {'name_suffix': 'Unif'}}, {'weights': 'distance', 'ag_args': {'name_suffix': 'Dist'}}],

}

Fitting 11 L1 models ...

Fitting model: KNeighborsUnif ...

Exception ignored on calling ctypes callback function: <function _ThreadpoolInfo._find_modules_with_dl_iterate_phdr.<locals>.match_module_callback at 0x7f2e74356550>

Traceback (most recent call last):

File "/home/coco/anaconda3/envs/py38/lib/python3.8/site-packages/threadpoolctl.py", line 400, in match_module_callback

self._make_module_from_path(filepath)

File "/home/coco/anaconda3/envs/py38/lib/python3.8/site-packages/threadpoolctl.py", line 515, in _make_module_from_path

module = module_class(filepath, prefix, user_api, internal_api)

File "/home/coco/anaconda3/envs/py38/lib/python3.8/site-packages/threadpoolctl.py", line 606, in __init__

self.version = self.get_version()

File "/home/coco/anaconda3/envs/py38/lib/python3.8/site-packages/threadpoolctl.py", line 646, in get_version

config = get_config().split()

AttributeError: 'NoneType' object has no attribute 'split'

-52278.8213 = Validation score (-root_mean_squared_error)

0.2s = Training runtime

0.04s = Validation runtime

Fitting model: KNeighborsDist ...

Exception ignored on calling ctypes callback function: <function _ThreadpoolInfo._find_modules_with_dl_iterate_phdr.<locals>.match_module_callback at 0x7f2e4f9dd670>

Traceback (most recent call last):

File "/home/coco/anaconda3/envs/py38/lib/python3.8/site-packages/threadpoolctl.py", line 400, in match_module_callback

self._make_module_from_path(filepath)

File "/home/coco/anaconda3/envs/py38/lib/python3.8/site-packages/threadpoolctl.py", line 515, in _make_module_from_path

module = module_class(filepath, prefix, user_api, internal_api)

File "/home/coco/anaconda3/envs/py38/lib/python3.8/site-packages/threadpoolctl.py", line 606, in __init__

self.version = self.get_version()

File "/home/coco/anaconda3/envs/py38/lib/python3.8/site-packages/threadpoolctl.py", line 646, in get_version

config = get_config().split()

AttributeError: 'NoneType' object has no attribute 'split'

-51314.2734 = Validation score (-root_mean_squared_error)

0.03s = Training runtime

0.01s = Validation runtime

Fitting model: LightGBMXT ...

-27196.7065 = Validation score (-root_mean_squared_error)

2.18s = Training runtime

0.02s = Validation runtime

Fitting model: LightGBM ...

-28692.2871 = Validation score (-root_mean_squared_error)

5.13s = Training runtime

0.05s = Validation runtime

Fitting model: RandomForestMSE ...

-32785.3519 = Validation score (-root_mean_squared_error)

0.37s = Training runtime

0.02s = Validation runtime

Fitting model: CatBoost ...

-28465.6966 = Validation score (-root_mean_squared_error)

43.66s = Training runtime

0.01s = Validation runtime

Fitting model: ExtraTreesMSE ...

-32045.9062 = Validation score (-root_mean_squared_error)

0.3s = Training runtime

0.02s = Validation runtime

Fitting model: NeuralNetFastAI ...

-33846.1211 = Validation score (-root_mean_squared_error)

1.29s = Training runtime

0.02s = Validation runtime

Fitting model: XGBoost ...

-27778.2437 = Validation score (-root_mean_squared_error)

0.87s = Training runtime

0.01s = Validation runtime

Fitting model: NeuralNetTorch ...

-36076.0341 = Validation score (-root_mean_squared_error)

2.24s = Training runtime

0.02s = Validation runtime

Fitting model: LightGBMLarge ...

-32084.1712 = Validation score (-root_mean_squared_error)

7.97s = Training runtime

0.04s = Validation runtime

Fitting model: WeightedEnsemble_L2 ...

-26322.571 = Validation score (-root_mean_squared_error)

0.16s = Training runtime

0.0s = Validation runtime

AutoGluon training complete, total runtime = 65.86s ... Best model: "WeightedEnsemble_L2"

TabularPredictor saved. To load, use: predictor = TabularPredictor.load("AutogluonModels/ag-20231210_084023/")

WARNING: Int features without null values at train time contain null values at inference time! Imputing nulls to 0. To avoid this, pass the features as floats during fit!

WARNING: Int features with nulls: ['BsmtFinSF1', 'BsmtFinSF2', 'BsmtUnfSF', 'TotalBsmtSF', 'BsmtFullBath', 'BsmtHalfBath', 'GarageCars', 'GarageArea']

[1000] valid_set's rmse: 27505.1

[2000] valid_set's rmse: 27240.4

[3000] valid_set's rmse: 27201.5

[4000] valid_set's rmse: 27197.3

[5000] valid_set's rmse: 27197.2

[1000] valid_set's rmse: 29499.8

[2000] valid_set's rmse: 28896.4

[3000] valid_set's rmse: 28752.1

[4000] valid_set's rmse: 28705.7

[5000] valid_set's rmse: 28695.2

[6000] valid_set's rmse: 28693

[7000] valid_set's rmse: 28692.5

[8000] valid_set's rmse: 28692.3

[9000] valid_set's rmse: 28692.3

[10000] valid_set's rmse: 28692.3

[1000] valid_set's rmse: 32134.9

[2000] valid_set's rmse: 32087.8

[3000] valid_set's rmse: 32084.2

[4000] valid_set's rmse: 32084.2

[5000] valid_set's rmse: 32084.2

5. Submission

df_submission['SalePrice'] = yyhat

df_submission.to_csv("submission.csv",index=False)

!kaggle competitions submit -c house-prices-advanced-regression-techniques -f submission.csv -m "First submission using AutoGluon"

!rm submission.csv

Warning: Your Kaggle API key is readable by other users on this system! To fix this, you can run 'chmod 600 /home/coco/.kaggle/kaggle.json'

100%|██████████████████████████████████████| 21.1k/21.1k [00:01<00:00, 11.4kB/s]

Successfully submitted to House Prices - Advanced Regression Techniques
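AutoGluon optimized plain RMSE on SalePrice. The competition's evaluation page (linked in the ref above) scores submissions differently: it uses RMSE between the logarithm of the predicted price and the logarithm of the observed price. The sketch below computes both metrics using only the standard library. The prices are made up for illustration. It shows that the log version measures relative error, so a 20,000 miss on a cheap house costs more than the same miss on an expensive one.

```python
import math

def rmse(y_true, y_pred):
    """Root mean squared error on the raw price scale (what AutoGluon reported)."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def rmse_log(y_true, y_pred):
    """RMSE between log(prediction) and log(observation), as described for the leaderboard."""
    return rmse([math.log(t) for t in y_true], [math.log(p) for p in y_pred])

# Hypothetical prices: the same absolute miss (20,000) on a cheap and on an expensive house
cheap = rmse_log([100_000], [120_000])
expensive = rmse_log([500_000], [520_000])
print(round(cheap, 4), round(expensive, 4))  # 0.1823 0.0392
```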

958/4955

0.19334006054490413

Not a bad rank.
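The ratio above is the leaderboard position divided by the number of teams, so a smaller value is better. Here 958/4955 ≈ 0.193, which places this submission roughly in the top 19.3%. The helper below computes this ratio. Its name, `top_fraction`, is hypothetical.

```python
def top_fraction(rank, n_teams):
    """Fraction of the leaderboard at or above this rank (smaller is better)."""
    if not 1 <= rank <= n_teams:
        raise ValueError("rank must be between 1 and n_teams")
    return rank / n_teams

print(f"top {top_fraction(958, 4955):.1%}")  # top 19.3%
```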

6. Interpretation and Visualization (HW)

- Investigate which variables are useful for predicting SalePrice.
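A quick, model-free starting point is to rank numeric columns by their Pearson correlation with SalePrice. The standard-library sketch below uses the LotArea and SalePrice values of the ten rows printed in the df_train table below. Ten rows are far too few to draw conclusions; the code only illustrates the calculation. With the full data in pandas, something like `df_train.corr(numeric_only=True)['SalePrice']` should do the same. Correlation captures only linear association and skips categorical columns.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# LotArea and SalePrice of the ten rows shown in the df_train table (first 5 and last 5)
lot_area = [8450, 9600, 11250, 9550, 14260, 7917, 13175, 9042, 9717, 9937]
sale_price = [208500, 181500, 223500, 140000, 250000, 175000, 210000, 266500, 142125, 147500]
print(round(pearson(lot_area, sale_price), 3))
```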

df_train

|  | Id | MSSubClass | MSZoning | LotFrontage | LotArea | Street | Alley | LotShape | LandContour | Utilities | ... | PoolArea | PoolQC | Fence | MiscFeature | MiscVal | MoSold | YrSold | SaleType | SaleCondition | SalePrice |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 60 | RL | 65.0 | 8450 | Pave | NaN | Reg | Lvl | AllPub | ... | 0 | NaN | NaN | NaN | 0 | 2 | 2008 | WD | Normal | 208500 |

| 1 | 2 | 20 | RL | 80.0 | 9600 | Pave | NaN | Reg | Lvl | AllPub | ... | 0 | NaN | NaN | NaN | 0 | 5 | 2007 | WD | Normal | 181500 |

| 2 | 3 | 60 | RL | 68.0 | 11250 | Pave | NaN | IR1 | Lvl | AllPub | ... | 0 | NaN | NaN | NaN | 0 | 9 | 2008 | WD | Normal | 223500 |

| 3 | 4 | 70 | RL | 60.0 | 9550 | Pave | NaN | IR1 | Lvl | AllPub | ... | 0 | NaN | NaN | NaN | 0 | 2 | 2006 | WD | Abnorml | 140000 |

| 4 | 5 | 60 | RL | 84.0 | 14260 | Pave | NaN | IR1 | Lvl | AllPub | ... | 0 | NaN | NaN | NaN | 0 | 12 | 2008 | WD | Normal | 250000 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 1455 | 1456 | 60 | RL | 62.0 | 7917 | Pave | NaN | Reg | Lvl | AllPub | ... | 0 | NaN | NaN | NaN | 0 | 8 | 2007 | WD | Normal | 175000 |

| 1456 | 1457 | 20 | RL | 85.0 | 13175 | Pave | NaN | Reg | Lvl | AllPub | ... | 0 | NaN | MnPrv | NaN | 0 | 2 | 2010 | WD | Normal | 210000 |

| 1457 | 1458 | 70 | RL | 66.0 | 9042 | Pave | NaN | Reg | Lvl | AllPub | ... | 0 | NaN | GdPrv | Shed | 2500 | 5 | 2010 | WD | Normal | 266500 |

| 1458 | 1459 | 20 | RL | 68.0 | 9717 | Pave | NaN | Reg | Lvl | AllPub | ... | 0 | NaN | NaN | NaN | 0 | 4 | 2010 | WD | Normal | 142125 |

| 1459 | 1460 | 20 | RL | 75.0 | 9937 | Pave | NaN | Reg | Lvl | AllPub | ... | 0 | NaN | NaN | NaN | 0 | 6 | 2008 | WD | Normal | 147500 |

1460 rows × 81 columns

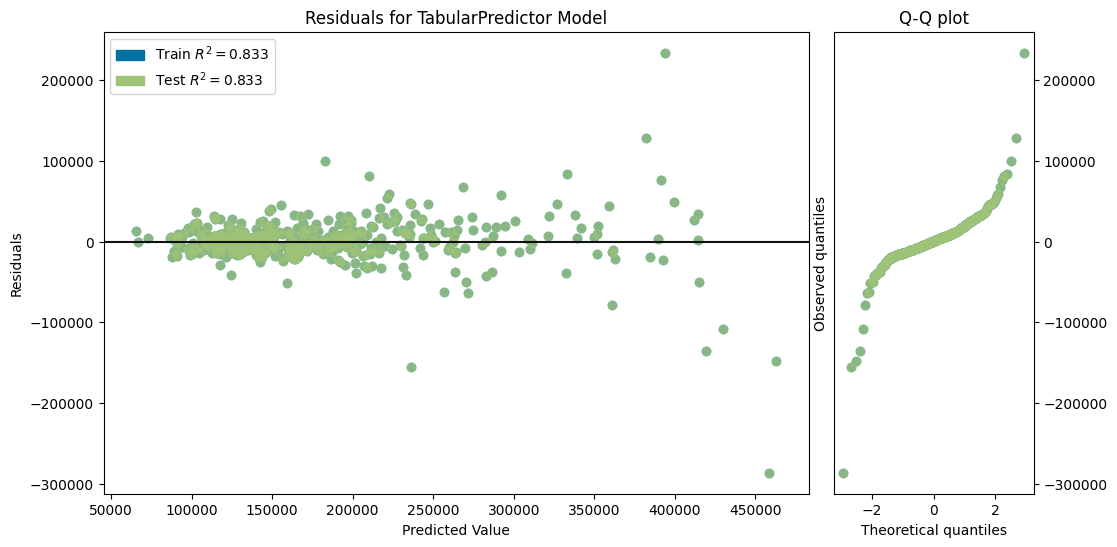

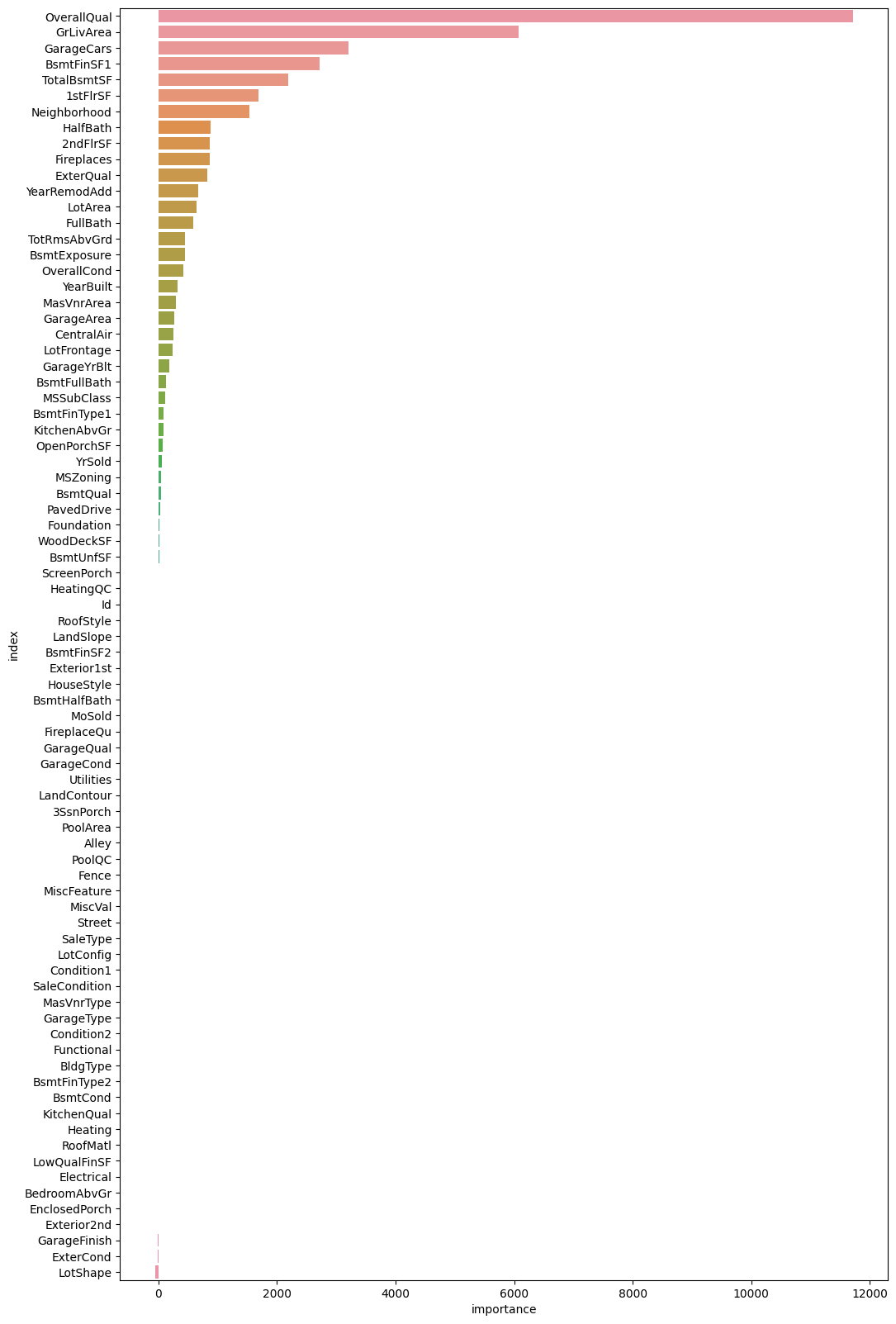

auto.quick_fit(

train_data=df_train,

label='SalePrice',

show_feature_importance_barplots=True

)

No path specified. Models will be saved in: "AutogluonModels/ag-20231210_084134/"

Model Prediction for SalePrice

Using validation data for Test points

Model Leaderboard

|  | model | score_test | score_val | pred_time_test | pred_time_val | fit_time | pred_time_test_marginal | pred_time_val_marginal | fit_time_marginal | stack_level | can_infer | fit_order |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | LightGBMXT | -30529.412291 | -32535.182194 | 0.008621 | 0.006892 | 0.269097 | 0.008621 | 0.006892 | 0.269097 | 1 | True | 1 |

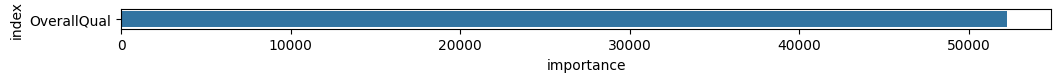

Feature Importance for Trained Model

|  | importance | stddev | p_value | n | p99_high | p99_low |

|---|---|---|---|---|---|---|

| OverallQual | 11716.915326 | 705.955990 | 1.573763e-06 | 5 | 13170.488468 | 10263.342185 |

| GrLivArea | 6071.089919 | 394.407430 | 2.125470e-06 | 5 | 6883.180269 | 5258.999569 |

| GarageCars | 3209.379857 | 372.418575 | 2.137296e-05 | 5 | 3976.194851 | 2442.564863 |

| BsmtFinSF1 | 2719.389615 | 125.895615 | 5.496647e-07 | 5 | 2978.610426 | 2460.168805 |

| TotalBsmtSF | 2187.752068 | 328.342663 | 5.909712e-05 | 5 | 2863.814149 | 1511.689987 |

| 1stFlrSF | 1688.228494 | 248.951746 | 5.513520e-05 | 5 | 2200.823579 | 1175.633408 |

| Neighborhood | 1534.658374 | 377.814804 | 4.073284e-04 | 5 | 2312.584278 | 756.732471 |

| HalfBath | 881.236830 | 353.675558 | 2.542584e-03 | 5 | 1609.459692 | 153.013968 |

| 2ndFlrSF | 872.631138 | 123.229525 | 4.647906e-05 | 5 | 1126.362433 | 618.899844 |

| Fireplaces | 868.173697 | 364.469844 | 2.989959e-03 | 5 | 1618.622144 | 117.725249 |

| ExterQual | 829.910645 | 401.498301 | 4.933027e-03 | 5 | 1656.601195 | 3.220095 |

| YearRemodAdd | 675.119616 | 166.871475 | 4.136303e-04 | 5 | 1018.710290 | 331.528942 |

| LotArea | 645.731150 | 240.401151 | 1.933927e-03 | 5 | 1140.720443 | 150.741856 |

| FullBath | 587.941341 | 102.476487 | 1.064026e-04 | 5 | 798.941844 | 376.940838 |

| TotRmsAbvGrd | 455.362588 | 146.798615 | 1.134317e-03 | 5 | 757.622965 | 153.102211 |

| BsmtExposure | 452.574670 | 100.266016 | 2.711034e-04 | 5 | 659.023783 | 246.125556 |

| OverallCond | 415.878748 | 101.116481 | 3.882599e-04 | 5 | 624.078980 | 207.678516 |

| YearBuilt | 328.365122 | 49.414644 | 5.972756e-05 | 5 | 430.110557 | 226.619687 |

| MasVnrArea | 291.987049 | 102.297731 | 1.546173e-03 | 5 | 502.619491 | 81.354608 |

| GarageArea | 266.571657 | 194.368541 | 1.870635e-02 | 5 | 666.779168 | -133.635855 |

| CentralAir | 252.005310 | 43.263826 | 1.002687e-04 | 5 | 341.086127 | 162.924494 |

| LotFrontage | 234.476025 | 232.700363 | 4.367093e-02 | 5 | 713.609289 | -244.657239 |

| GarageYrBlt | 179.569936 | 41.348994 | 3.147824e-04 | 5 | 264.708087 | 94.431785 |

| BsmtFullBath | 130.488441 | 27.317412 | 2.176156e-04 | 5 | 186.735370 | 74.241513 |

| MSSubClass | 115.302650 | 77.955090 | 1.486407e-02 | 5 | 275.813256 | -45.207957 |

| BsmtFinType1 | 89.671801 | 23.805159 | 5.438720e-04 | 5 | 138.686952 | 40.656649 |

| KitchenAbvGr | 88.796843 | 13.144665 | 5.597648e-05 | 5 | 115.861890 | 61.731796 |

| OpenPorchSF | 76.599171 | 32.768044 | 3.198232e-03 | 5 | 144.069027 | 9.129315 |

| YrSold | 55.041099 | 17.204734 | 1.010331e-03 | 5 | 90.465884 | 19.616315 |

| MSZoning | 52.239352 | 30.345403 | 9.156204e-03 | 5 | 114.720955 | -10.242251 |

| BsmtQual | 45.470810 | 50.360612 | 5.681646e-02 | 5 | 149.164006 | -58.222385 |

| PavedDrive | 27.952965 | 11.965434 | 3.205450e-03 | 5 | 52.589960 | 3.315970 |

| Foundation | 15.764567 | 21.136187 | 8.534210e-02 | 5 | 59.284268 | -27.755134 |

| WoodDeckSF | 14.490268 | 49.028633 | 2.724108e-01 | 5 | 115.440900 | -86.460364 |

| BsmtUnfSF | 14.251367 | 14.062238 | 4.304693e-02 | 5 | 43.205709 | -14.702976 |

| ScreenPorch | 10.514393 | 6.927148 | 1.371401e-02 | 5 | 24.777486 | -3.748700 |

| HeatingQC | 10.365567 | 6.499702 | 1.172951e-02 | 5 | 23.748544 | -3.017409 |

| Id | 9.875743 | 25.538459 | 2.179917e-01 | 5 | 62.459783 | -42.708298 |

| RoofStyle | 8.089551 | 53.663840 | 3.765020e-01 | 5 | 118.584139 | -102.405037 |

| LandSlope | 6.869875 | 7.000689 | 4.662255e-02 | 5 | 21.284391 | -7.544641 |

| BsmtFinSF2 | 6.856908 | 2.939085 | 3.220858e-03 | 5 | 12.908525 | 0.805292 |

| Exterior1st | 4.492434 | 8.697021 | 1.561884e-01 | 5 | 22.399720 | -13.414852 |

| HouseStyle | 3.571663 | 3.437669 | 4.042508e-02 | 5 | 10.649870 | -3.506545 |

| BsmtHalfBath | 2.760108 | 1.020890 | 1.888221e-03 | 5 | 4.862135 | 0.658082 |

| MoSold | 1.776995 | 18.530416 | 4.203498e-01 | 5 | 39.931378 | -36.377389 |

| FireplaceQu | 0.246895 | 8.880015 | 4.767049e-01 | 5 | 18.530969 | -18.037180 |

| GarageQual | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| GarageCond | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| Utilities | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| LandContour | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| 3SsnPorch | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| PoolArea | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| Alley | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| PoolQC | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| Fence | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| MiscFeature | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| MiscVal | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| Street | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| SaleType | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| LotConfig | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| Condition1 | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| SaleCondition | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| MasVnrType | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| GarageType | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| Condition2 | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| Functional | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| BldgType | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| BsmtFinType2 | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| BsmtCond | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| KitchenQual | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| Heating | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| RoofMatl | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| LowQualFinSF | 0.000000 | 0.000000 | 5.000000e-01 | 5 | 0.000000 | 0.000000 |

| Electrical | -0.249231 | 0.232446 | 9.627220e-01 | 5 | 0.229380 | -0.727841 |

| BedroomAbvGr | -0.281759 | 5.209289 | 5.452164e-01 | 5 | 10.444239 | -11.007758 |

| EnclosedPorch | -0.726761 | 1.153234 | 8.842100e-01 | 5 | 1.647763 | -3.101286 |

| Exterior2nd | -1.300222 | 2.040234 | 8.863621e-01 | 5 | 2.900648 | -5.501092 |

| GarageFinish | -4.409206 | 10.141071 | 8.070016e-01 | 5 | 16.471400 | -25.289812 |

| ExterCond | -4.973063 | 0.837647 | 9.999070e-01 | 5 | -3.248336 | -6.697791 |

| LotShape | -56.043807 | 64.453373 | 9.381189e-01 | 5 | 76.666578 | -188.754193 |
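As far as I understand AutoGluon's `feature_importance`, the importance column is a permutation importance. It is the drop in validation score after one column is randomly shuffled. This is repeated over several shuffles (n = 5 here) and summarized by the mean, standard deviation, p-value, and 99% bounds. This would explain the many exact zeros: if the fitted LightGBMXT never splits on a column, shuffling that column leaves every prediction unchanged. The standard-library sketch below uses a hypothetical toy model that only looks at its first feature. The helper names are illustrative, not AutoGluon's API.

```python
import math
import random

def rmse(y_true, y_pred):
    """Root mean squared error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def toy_model(row):
    """Hypothetical fitted model: uses feature 0 only and ignores feature 1."""
    return 20000 * row[0] + 50000

def permutation_importance(model, X, y, col, n_shuffles=5, seed=0):
    """Mean increase in RMSE (= drop in -RMSE) after shuffling column `col`."""
    rng = random.Random(seed)
    base = rmse(y, [model(row) for row in X])
    increases = []
    for _ in range(n_shuffles):
        values = [row[col] for row in X]
        rng.shuffle(values)
        X_perm = [row[:col] + [v] + row[col + 1:] for row, v in zip(X, values)]
        increases.append(rmse(y, [model(row) for row in X_perm]) - base)
    return sum(increases) / n_shuffles

# Hypothetical data: y is fully determined by feature 0
X = [[5, 3], [6, 1], [7, 4], [8, 1], [9, 5], [10, 9]]
y = [150000, 170000, 190000, 210000, 230000, 250000]

print(permutation_importance(toy_model, X, y, col=0) > 0)  # True
print(permutation_importance(toy_model, X, y, col=1))      # 0.0
```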

Rows with the highest prediction error

Rows in this category worth inspecting for the causes of the error

|  | Id | MSSubClass | MSZoning | LotFrontage | LotArea | Street | Alley | LotShape | LandContour | Utilities | ... | Fence | MiscFeature | MiscVal | MoSold | YrSold | SaleType | SaleCondition | SalePrice | SalePrice_pred | error |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1182 | 1183 | 60 | RL | 160.0 | 15623 | Pave | NaN | IR1 | Lvl | AllPub | ... | MnPrv | NaN | 0 | 7 | 2007 | WD | Abnorml | 745000 | 458492.125000 | 286507.875000 |

| 1298 | 1299 | 60 | RL | 313.0 | 63887 | Pave | NaN | IR3 | Bnk | AllPub | ... | NaN | NaN | 0 | 1 | 2008 | New | Partial | 160000 | 394141.625000 | 234141.625000 |

| 688 | 689 | 20 | RL | 60.0 | 8089 | Pave | NaN | Reg | HLS | AllPub | ... | NaN | NaN | 0 | 10 | 2007 | New | Partial | 392000 | 236151.000000 | 155849.000000 |

| 898 | 899 | 20 | RL | 100.0 | 12919 | Pave | NaN | IR1 | Lvl | AllPub | ... | NaN | NaN | 0 | 3 | 2010 | New | Partial | 611657 | 463175.906250 | 148481.093750 |

| 440 | 441 | 20 | RL | 105.0 | 15431 | Pave | NaN | Reg | Lvl | AllPub | ... | NaN | NaN | 0 | 4 | 2009 | WD | Normal | 555000 | 419354.593750 | 135645.406250 |

| 581 | 582 | 20 | RL | 98.0 | 12704 | Pave | NaN | Reg | Lvl | AllPub | ... | NaN | NaN | 0 | 8 | 2009 | New | Partial | 253293 | 382104.968750 | 128811.968750 |

| 769 | 770 | 60 | RL | 47.0 | 53504 | Pave | NaN | IR2 | HLS | AllPub | ... | NaN | NaN | 0 | 6 | 2010 | WD | Normal | 538000 | 429698.281250 | 108301.718750 |

| 632 | 633 | 20 | RL | 85.0 | 11900 | Pave | NaN | Reg | Lvl | AllPub | ... | NaN | NaN | 0 | 4 | 2009 | WD | Family | 82500 | 182936.734375 | 100436.734375 |

| 4 | 5 | 60 | RL | 84.0 | 14260 | Pave | NaN | IR1 | Lvl | AllPub | ... | NaN | NaN | 0 | 12 | 2008 | WD | Normal | 250000 | 333203.062500 | 83203.062500 |

| 666 | 667 | 60 | RL | NaN | 18450 | Pave | NaN | IR1 | Lvl | AllPub | ... | NaN | NaN | 0 | 8 | 2007 | WD | Abnorml | 129000 | 210298.609375 | 81298.609375 |

10 rows × 83 columns
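The error column in this table is the absolute difference between the observed and predicted SalePrice, and rows are sorted by it in descending order. Because the error is absolute, both directions count. Id 1183 was under-predicted, while Id 1299 (a 63887 sq ft lot sold for 160000) was over-predicted. The standard-library sketch below rebuilds that ranking from three rows copied from the table.

```python
# (Id, SalePrice, SalePrice_pred) copied from three rows of the table above
rows = [
    (689, 392000, 236151.0),
    (1183, 745000, 458492.125),
    (1299, 160000, 394141.625),
]

# absolute error per row, then sort from largest to smallest error
errors = sorted(
    ((rid, abs(y - y_hat)) for rid, y, y_hat in rows),
    key=lambda item: item[1],
    reverse=True,
)
for rid, err in errors:
    print(rid, err)
```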

df_train.iloc[[1]]

|  | Id | MSSubClass | MSZoning | LotFrontage | LotArea | Street | Alley | LotShape | LandContour | Utilities | ... | PoolArea | PoolQC | Fence | MiscFeature | MiscVal | MoSold | YrSold | SaleType | SaleCondition | SalePrice |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 2 | 20 | RL | 80.0 | 9600 | Pave | NaN | Reg | Lvl | AllPub | ... | 0 | NaN | NaN | NaN | 0 | 5 | 2007 | WD | Normal | 181500 |

1 rows × 81 columns

predictr.predict(df_train.iloc[[1]])

1    170013.546875

Name: SalePrice, dtype: float32

# auto.explain_rows(

# train_data=df_train,

# model=predictr,

# rows=df_train.iloc[[1]],

# display_rows=True,

# plot='waterfall'

# )

- The code above takes too long to run.

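ChatGPT's explanation of the variables

This data comes from Kaggle's "House Prices - Advanced Regression Techniques" competition, where the task is to predict house sale prices. The variables in the data are described below.

SalePrice (sale price): the sale price of the house (the target variable to predict)

MSSubClass (building class): a code identifying the type of building

MSZoning (general zoning classification): the general zoning classification

LotFrontage (linear feet of street connected to the property): length of the street connected to the house

LotArea (lot size): lot area in square feet

Street (type of road access): type of road access (e.g. paved)

Alley (type of alley access): type of alley access

LotShape (general shape of the property): shape of the lot

LandContour (flatness of the property): how flat the lot is

Utilities (type of utilities available): types of utilities available

LotConfig (lot configuration): configuration of the lot

LandSlope (slope of the property): slope of the lot

Neighborhood (physical location within Ames city limits): physical location

Condition1 (proximity to a main road or railroad): proximity to a main road or railroad

Condition2 (proximity to a main road or railroad, if a second is present): proximity to a second main road or railroad, if present

BldgType (type of dwelling): type of dwelling

HouseStyle (style of dwelling): style of dwelling

OverallQual (overall material and finish quality): overall quality of materials and finish

OverallCond (overall condition rating): overall condition rating

YearBuilt (original construction date): year the house was originally built

… plus various other house features and descriptions.

Each of these variables describes some characteristic of a house, and the goal is to use these characteristics to predict each house's sale price.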

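- In the data above, OverallQual is the most important variable for predicting SalePrice (it tops the feature importance table).

set(df_train['OverallQual'])

{1, 2, 3, 4, 5, 6, 7, 8, 9, 10}

The higher the grade, the higher SalePrice tends to be.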

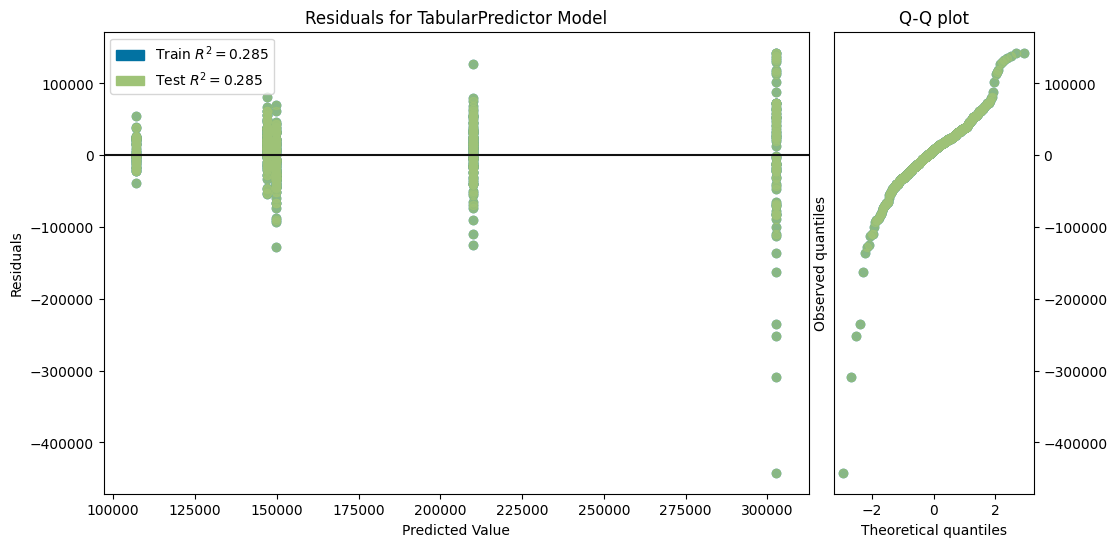

- Let's try using OverallQual as the only variable.

df_tr_ = df_train[['OverallQual','SalePrice']]

df_ts_ = df_test[['OverallQual']]

df_tr_

|  | OverallQual | SalePrice |

|---|---|---|

| 0 | 7 | 208500 |

| 1 | 6 | 181500 |

| 2 | 7 | 223500 |

| 3 | 7 | 140000 |

| 4 | 8 | 250000 |

| ... | ... | ... |

| 1455 | 6 | 175000 |

| 1456 | 6 | 210000 |

| 1457 | 7 | 266500 |

| 1458 | 5 | 142125 |

| 1459 | 5 | 147500 |

1460 rows × 2 columns
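One way to check the claim that higher OverallQual goes with higher SalePrice is to average SalePrice within each grade. The standard-library sketch below does this over the ten rows printed in the df_tr_ table above. On the full data, `df_tr_.groupby('OverallQual')['SalePrice'].mean()` would do the same in pandas. Even in these ten rows, grade 7 alone spans 140000 to 266500. This suggests a single grade variable leaves a lot of price variation unexplained.

```python
from collections import defaultdict

# (OverallQual, SalePrice) for the ten rows shown in the df_tr_ table
pairs = [(7, 208500), (6, 181500), (7, 223500), (7, 140000), (8, 250000),
         (6, 175000), (6, 210000), (7, 266500), (5, 142125), (5, 147500)]

totals = defaultdict(lambda: [0, 0])  # grade -> [sum of prices, count]
for grade, price in pairs:
    totals[grade][0] += price
    totals[grade][1] += 1

mean_by_grade = {g: s / c for g, (s, c) in sorted(totals.items())}
print(mean_by_grade)  # means rise with grade: 144812.5, about 188833, 209625.0, 250000.0
```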

# step1 -- pass

# step2

predictr = TabularPredictor(label='SalePrice')

# step3

predictr.fit(df_tr_)

# step4

yhat = predictr.predict(df_tr_)

#yyhat = predictr.predict(df_ts_)

No path specified. Models will be saved in: "AutogluonModels/ag-20231210_090017/"

Beginning AutoGluon training ...

AutoGluon will save models to "AutogluonModels/ag-20231210_090017/"

AutoGluon Version: 0.8.2

Python Version: 3.8.18

Operating System: Linux

Platform Machine: x86_64

Platform Version: #38~22.04.1-Ubuntu SMP PREEMPT_DYNAMIC Thu Nov 2 18:01:13 UTC 2

Disk Space Avail: 643.82 GB / 982.82 GB (65.5%)

Train Data Rows: 1460

Train Data Columns: 1

Label Column: SalePrice

Preprocessing data ...

AutoGluon infers your prediction problem is: 'regression' (because dtype of label-column == int and many unique label-values observed).

Label info (max, min, mean, stddev): (755000, 34900, 180921.19589, 79442.50288)

If 'regression' is not the correct problem_type, please manually specify the problem_type parameter during predictor init (You may specify problem_type as one of: ['binary', 'multiclass', 'regression'])

Using Feature Generators to preprocess the data ...

Fitting AutoMLPipelineFeatureGenerator...

Available Memory: 128082.22 MB

Train Data (Original) Memory Usage: 0.01 MB (0.0% of available memory)

Inferring data type of each feature based on column values. Set feature_metadata_in to manually specify special dtypes of the features.

Stage 1 Generators:

Fitting AsTypeFeatureGenerator...

Stage 2 Generators:

Fitting FillNaFeatureGenerator...

Stage 3 Generators:

Fitting IdentityFeatureGenerator...

Stage 4 Generators:

Fitting DropUniqueFeatureGenerator...

Stage 5 Generators:

Fitting DropDuplicatesFeatureGenerator...

Types of features in original data (raw dtype, special dtypes):

('int', []) : 1 | ['OverallQual']

Types of features in processed data (raw dtype, special dtypes):

('int', []) : 1 | ['OverallQual']

0.0s = Fit runtime

1 features in original data used to generate 1 features in processed data.

Train Data (Processed) Memory Usage: 0.01 MB (0.0% of available memory)

Data preprocessing and feature engineering runtime = 0.03s ...

AutoGluon will gauge predictive performance using evaluation metric: 'root_mean_squared_error'

This metric's sign has been flipped to adhere to being higher_is_better. The metric score can be multiplied by -1 to get the metric value.

To change this, specify the eval_metric parameter of Predictor()

Automatically generating train/validation split with holdout_frac=0.2, Train Rows: 1168, Val Rows: 292

User-specified model hyperparameters to be fit:

{

'NN_TORCH': {},

'GBM': [{'extra_trees': True, 'ag_args': {'name_suffix': 'XT'}}, {}, 'GBMLarge'],

'CAT': {},

'XGB': {},

'FASTAI': {},

'RF': [{'criterion': 'gini', 'ag_args': {'name_suffix': 'Gini', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'entropy', 'ag_args': {'name_suffix': 'Entr', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'squared_error', 'ag_args': {'name_suffix': 'MSE', 'problem_types': ['regression', 'quantile']}}],

'XT': [{'criterion': 'gini', 'ag_args': {'name_suffix': 'Gini', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'entropy', 'ag_args': {'name_suffix': 'Entr', 'problem_types': ['binary', 'multiclass']}}, {'criterion': 'squared_error', 'ag_args': {'name_suffix': 'MSE', 'problem_types': ['regression', 'quantile']}}],

'KNN': [{'weights': 'uniform', 'ag_args': {'name_suffix': 'Unif'}}, {'weights': 'distance', 'ag_args': {'name_suffix': 'Dist'}}],

}

Fitting 11 L1 models ...

Fitting model: KNeighborsUnif ...

-51302.9262 = Validation score (-root_mean_squared_error)

0.04s = Training runtime

0.01s = Validation runtime

Fitting model: KNeighborsDist ...

-51302.9262 = Validation score (-root_mean_squared_error)

0.03s = Training runtime

0.0s = Validation runtime

Fitting model: LightGBMXT ...

-61288.6739 = Validation score (-root_mean_squared_error)

0.28s = Training runtime

0.0s = Validation runtime

Fitting model: LightGBM ...

-48771.8547 = Validation score (-root_mean_squared_error)

0.21s = Training runtime

0.0s = Validation runtime

Fitting model: RandomForestMSE ...

-47823.2599 = Validation score (-root_mean_squared_error)

0.25s = Training runtime

0.02s = Validation runtime

Fitting model: CatBoost ...

-47813.9877 = Validation score (-root_mean_squared_error)

0.13s = Training runtime

0.0s = Validation runtime

Fitting model: ExtraTreesMSE ...

-47823.2599 = Validation score (-root_mean_squared_error)

0.24s = Training runtime

0.02s = Validation runtime

Fitting model: NeuralNetFastAI ...

-47262.4708 = Validation score (-root_mean_squared_error)

0.67s = Training runtime

0.0s = Validation runtime

Fitting model: XGBoost ...

-47576.4125 = Validation score (-root_mean_squared_error)

0.09s = Training runtime

0.0s = Validation runtime

Fitting model: NeuralNetTorch ...

-47489.2325 = Validation score (-root_mean_squared_error)

1.25s = Training runtime

0.0s = Validation runtime

Fitting model: LightGBMLarge ...

-47629.3214 = Validation score (-root_mean_squared_error)

0.22s = Training runtime

0.0s = Validation runtime

Fitting model: WeightedEnsemble_L2 ...

-47251.0188 = Validation score (-root_mean_squared_error)

0.16s = Training runtime

0.0s = Validation runtime

AutoGluon training complete, total runtime = 3.77s ... Best model: "WeightedEnsemble_L2"

TabularPredictor saved. To load, use: predictor = TabularPredictor.load("AutogluonModels/ag-20231210_090017/")

[1000] valid_set's rmse: 61288.7

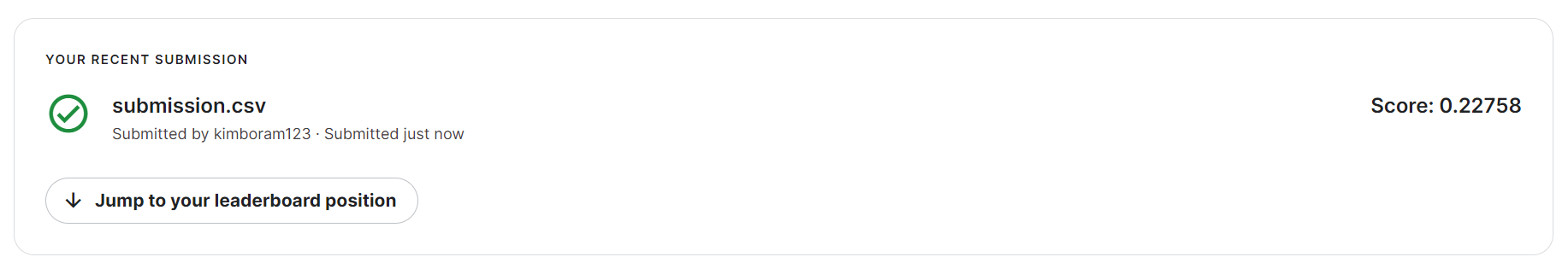

[2000] valid_set's rmse: 61288.7

yyhat = predictr.predict(df_ts_)

auto.quick_fit(

train_data=df_tr_,

label='SalePrice',

show_feature_importance_barplots=True

)

No path specified. Models will be saved in: "AutogluonModels/ag-20231210_090127/"

Model Prediction for SalePrice

Using validation data for Test points

Model Leaderboard

|  | model | score_test | score_val | pred_time_test | pred_time_val | fit_time | pred_time_test_marginal | pred_time_val_marginal | fit_time_marginal | stack_level | can_infer | fit_order |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | LightGBMXT | -52272.947289 | -50360.652261 | 0.000823 | 0.000755 | 0.215841 | 0.000823 | 0.000755 | 0.215841 | 1 | True | 1 |

Feature Importance for Trained Model

|  | importance | stddev | p_value | n | p99_high | p99_low |

|---|---|---|---|---|---|---|

| OverallQual | 52258.930244 | 2494.060513 | 6.206537e-07 | 5 | 57394.235311 | 47123.625176 |

Rows with the highest prediction error

Rows in this category worth inspecting for the causes of the error

|  | OverallQual | SalePrice | SalePrice_pred | error |

|---|---|---|---|---|

| 1182 | 10 | 745000 | 302755.9375 | 442244.0625 |

| 898 | 9 | 611657 | 302755.9375 | 308901.0625 |

| 440 | 10 | 555000 | 302755.9375 | 252244.0625 |

| 769 | 8 | 538000 | 302755.9375 | 235244.0625 |

| 1243 | 10 | 465000 | 302755.9375 | 162244.0625 |

| 1298 | 10 | 160000 | 302755.9375 | 142755.9375 |

| 458 | 8 | 161000 | 302755.9375 | 141755.9375 |

| 1211 | 8 | 164000 | 302755.9375 | 138755.9375 |

| 58 | 10 | 438780 | 302755.9375 | 136024.0625 |

| 991 | 8 | 168000 | 302755.9375 | 134755.9375 |
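Every row in this table got the same prediction, 302755.9375, even though OverallQual ranges from 8 to 10. With one integer feature, a tree-based model outputs one constant per group of grades, so grades 8 to 10 appear to share a leaf here. For a plain squared-error regression tree, that leaf constant would be the mean SalePrice of the training rows in the leaf. Boosting with shrinkage only roughly matches this. As a result, the very expensive grade-10 houses are pulled toward the group average. The sketch below is a piecewise-constant predictor. Only the value 302755.9375 comes from the table; the cut at grade 8 and `low_leaf` are hypothetical. Its absolute errors reproduce the error column for these rows.

```python
HIGH_LEAF = 302755.9375  # prediction shared by the grade 8-10 rows in the table above

def step_predict(overall_qual, low_leaf=150000.0):
    """Hypothetical one-feature model: one constant on each side of a cut at grade 8.

    Only HIGH_LEAF comes from the table; the cut-point 8 and low_leaf are made up.
    """
    return HIGH_LEAF if overall_qual >= 8 else low_leaf

# (OverallQual, SalePrice) for four rows of the table above
rows = [(10, 745000), (9, 611657), (10, 160000), (8, 161000)]
for qual, price in rows:
    print(qual, price, abs(price - step_predict(qual)))
```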

df_submission['SalePrice'] = yyhat

df_submission.to_csv("submission.csv",index=False)

!kaggle competitions submit -c house-prices-advanced-regression-techniques -f submission.csv -m "overallqual"

!rm submission.csv

Warning: Your Kaggle API key is readable by other users on this system! To fix this, you can run 'chmod 600 /home/coco/.kaggle/kaggle.json'

100%|██████████████████████████████████████| 21.4k/21.4k [00:01<00:00, 11.6kB/s]

Successfully submitted to House Prices - Advanced Regression Techniques

1110/5012

0.2214684756584198

The ratio went up (from about 0.193 to 0.221), so the rank got worse.
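AutoGluon's own validation scores point the same way. The logs report sign-flipped RMSE (higher is better), so negating a score gives back the RMSE. The full-feature WeightedEnsemble_L2 scored -26322.571, and the OverallQual-only one scored -47251.0188. The sketch below makes that comparison. These are single holdout estimates, and the two validation splits are not guaranteed to be identical.

```python
# Validation scores copied from the two fit logs above (sign-flipped RMSE)
scores = {
    "all 80 features": -26322.571,
    "OverallQual only": -47251.0188,
}

rmse = {name: -score for name, score in scores.items()}  # undo the sign flip
for name, value in sorted(rmse.items(), key=lambda kv: kv[1]):
    print(f"{name:>16}: RMSE = {value:,.0f}")

ratio = rmse["OverallQual only"] / rmse["all 80 features"]
print(f"OverallQual-only RMSE is {ratio:.2f}x larger")
```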